Dynatrace accelerates business transformation with new AI observability solution

Dynatrace

JANUARY 31, 2024

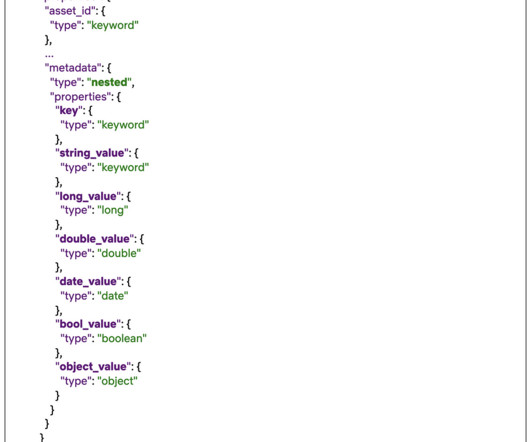

The RAG process begins by summarizing and converting user prompts into queries that are sent to a search platform that uses semantic similarities to find relevant data in vector databases, semantic caches, or other online data sources. Development and demand for AI tools come with a growing concern about their environmental cost.

Let's personalize your content