Load Balancing: Handling Heterogeneous Hardware

Uber Engineering

MAY 2, 2024

Learn how Uber changed its RPC load balancing within its Service Mesh to mitigate hardware heterogeneity.

Hardware Related Topics

DZone

JULY 10, 2024

Viking Enterprise Solutions (VES), a division of Sanmina Corporation, stands at the forefront of addressing these challenges with its innovative hardware and software solutions. As a product division of Sanmina, a $9 billion public company, VES leverages decades of manufacturing expertise to deliver cutting-edge data center solutions.

DZone

JULY 7, 2024

Hardware Configuration Recommendations (CPU): Ensure the BIOS settings use a non-power-saving mode to prevent the CPU from throttling. For servers using Intel CPUs that are not deployed in a multi-instance environment, disabling the vm.zone_reclaim_mode kernel parameter is recommended.

DZone

MARCH 18, 2024

Previously, proprietary hardware performed functions like routers, firewalls, load balancers, etc. In IBM Cloud, we have proprietary hardware like the FortiGate firewall that resides inside IBM Cloud data centers today. These hardware functions are packaged as virtual machine images in a VNF.

SQL Server Performance

MARCH 12, 2019

An important concern in optimizing the hardware platform is hardware components that restrict performance, known as bottlenecks. Quite often, the problem isn’t correcting performance bottlenecks as much as it is identifying them in the first place. Start with obtaining a performance baseline.

SQL Shack

APRIL 14, 2020

SQL Server performance tuning can be a difficult assignment, especially when working with a massive database where even a minor change can have a significant impact on existing query performance. Performance tuning plays a vital role in both database performance and product performance.

The Polyglot Developer

MARCH 26, 2018

Over the past month or so, in my free time, I’ve been working towards creating an affordable hardware wallet for various cryptocurrencies such as Bitcoin. Right now many cryptocurrency enthusiasts are using the Ledger Nano S hardware wallet, but those are very expensive and often in short supply.

DZone

AUGUST 7, 2023

While some distributions are aimed at experienced Linux users, there are distributions that cater to the needs of beginners or users with older hardware. These lightweight distributions are tailored to run on older hardware or low-powered devices while still providing users a robust and customizable experience.

DZone

JANUARY 3, 2024

Through the use of virtualization technology, multiple operating systems can now run on a single physical machine, revolutionizing the way we use computer hardware.

The Polyglot Developer

JANUARY 7, 2019

Not too long ago I had written a tutorial titled U2F Authentication with a YubiKey Using Node.js and jQuery, which demonstrated how to use hardware keys as a means of universal two-factor (U2F) authentication. […] and use Vue.js, which is a modern web framework. The post Implementing U2F Authentication With Hardware Keys Using Node.js […]

Dynatrace

OCTOBER 23, 2023

It enables multiple operating systems to run simultaneously on the same physical hardware and integrates closely with Windows-hosted services. Therefore, they experience how the application code functions and how the application operations depend on the underlying hardware resources and the operating system managed by Hyper-V.

DZone

SEPTEMBER 26, 2023

Startups, for instance, can get started without buying expensive hardware and scale flexibly. There is no doubt that the cloud has changed the way we run our software. Also, the cloud has enabled novel solutions such as serverless, managed Kubernetes and Docker, or edge functions.

DZone

FEBRUARY 23, 2023

Containerization simplifies the software development process because it removes the need to manage dependencies and target specific hardware. In the past few years, a growing number of organizations and developers have joined the Docker journey. However, it can be quite confusing to figure out how to run a container in the cloud.

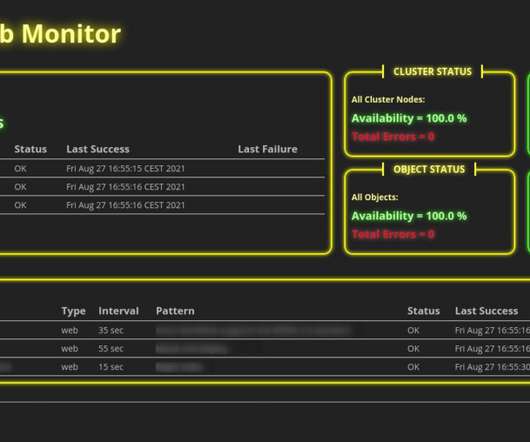

DZone

SEPTEMBER 29, 2021

For example, you can monitor the behavior of your applications, the hardware usage of your server nodes, or even the network traffic between servers. One prominent solution is the open-source tool Nagios which allows you to monitor hardware in every detail.

Dynatrace

JULY 24, 2024

By leveraging Dynatrace observability on Red Hat OpenShift running on Linux, you can accelerate modernization to hybrid cloud and increase operational efficiencies with greater visibility across the full stack from hardware through application processes.

DZone

JANUARY 23, 2022

Hardware: server and storage hardware or software faults such as disk failures, full disks, other hardware failures, servers running out of allocated resources, server software behaving abnormally, intra-DC network connectivity issues, etc. Redundancy in power, network, cooling systems, and possibly everything else relevant.

DZone

MARCH 20, 2024

Embedded within the Linux kernel, KVM enables the creation of VMs with their own virtualized hardware components, such as CPUs, memory, storage, and network cards, essentially mimicking a physical machine. KVM functions as a type 1 hypervisor, delivering near-native performance, which gives it an edge over type 2 hypervisors.

DZone

JANUARY 30, 2024

Understanding the Essence of CMDB: A Configuration Management Database (CMDB) is a centralized repository that stores information about configuration items (CIs) in an IT environment. These CIs include hardware, software, network devices, and other elements critical to an organization's IT operations.

Dynatrace

MARCH 20, 2024

Vulnerabilities or hardware failures can disrupt deployments and compromise application security. For instance, if a Kubernetes cluster experiences a hardware failure during deployment, it can lead to service disruptions and affect the user experience.

DZone

JANUARY 27, 2024

The Trusted Platform Module (TPM) is an important component in modern computing since it provides hardware-based security and enables a variety of security features. TPM chips have grown in relevance in both physical and virtual contexts, where they play a critical role in data security and preserving the integrity of computer systems.

DZone

MAY 1, 2023

In modern production environments, there are numerous hardware and software hooks that can be adjusted to improve latency and throughput. To achieve this level of performance, such systems require dedicated CPU cores that are free from interruptions by other processes, together with wider system tuning.

Dynatrace

SEPTEMBER 20, 2023

“They’re really focusing on hardware and software systems together,” Dunkin said. “How do you make hardware and software both secure by design?” The DOE supports the national cybersecurity strategy’s collective defense initiatives. The principle of “security by design” plays a major role in these efforts.

DZone

NOVEMBER 22, 2023

From hardware and software investments to ongoing maintenance and upgrades, every component contributes to the grand TCO puzzle. In today's fast-paced digital landscape, understanding the expenses involved in developing, deploying, and maintaining a digital product is crucial for businesses and individuals.

DZone

JANUARY 16, 2024

History of Operating Systems, Early Beginnings: The genesis of operating systems dates back to the emergence of large-scale computers in the 1950s and 1960s. These early computing machines, known as mainframes, required a system to manage their hardware resources efficiently.

DZone

JANUARY 16, 2024

This virtual representation, also known as a virtual instance or virtual machine, functions independently of the real resources beneath it.

The Morning Paper

MAY 12, 2019

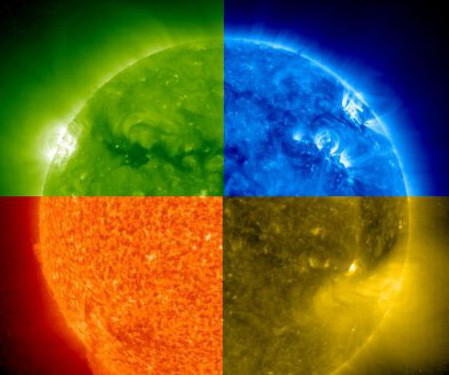

An open-source benchmark suite for microservices and their hardware-software implications for cloud & edge systems, Gan et al., ASPLOS’19. The paper examines the implications of microservices at the hardware, OS and networking stack, cluster management, and application framework levels, as well as the impact of tail latency.

DZone

MAY 16, 2023

Virtualization is a technology that can create servers, storage devices, and networks in virtual space. This allows users to interact with any hardware resource through a digital interface. Devices connect to a virtual network to share data and resources.

DZone

NOVEMBER 8, 2023

Microsoft’s Hyper-V is a top virtualization platform that enables companies to maximize the use of their hardware resources. Virtualization has emerged as a crucial tool for businesses looking to manage their IT infrastructure with greater efficiency, flexibility, and cost-effectiveness in today’s rapidly changing digital environment.

Dynatrace

JULY 8, 2024

Managing SNMP devices at scale can be challenging. SNMP (Simple Network Management Protocol) provides a standardized framework for monitoring and managing devices on IP networks. Its simplicity, scalability, and compatibility with a wide range of hardware make it an ideal choice for network management across diverse environments.

DZone

NOVEMBER 10, 2023

Hyper-V: Enabling Cloud Virtualization. Hyper-V serves as a fundamental component in cloud computing environments, enabling efficient and flexible virtualization of resources. By leveraging Hyper-V, cloud service providers can optimize hardware utilization by running multiple virtual machines (VMs) on a single physical server.

Dynatrace

FEBRUARY 2, 2022

This means you no longer have to procure new hardware, which can be a time-consuming and expensive process. With an on-prem installation, you must procure hardware, install the OS on the server, install the application, and configure it. On-prem installations are also a lot more expensive to manage over time.

DZone

APRIL 21, 2023

Test tools are software or hardware designed to test a system or application. Various test tools are available for different types of testing, including unit testing, integration testing, and more. Some test tools are intended for developers during the development process, while others are designed for quality assurance teams or end users.

DZone

DECEMBER 20, 2023

This ground-breaking method enables users to run multiple virtual machines on a single physical server, increasing flexibility, lowering hardware costs, and improving efficiency. Mini PCs have become effective virtualization tools in this setting, providing a portable yet effective solution for a variety of applications.

Scalegrid

NOVEMBER 25, 2021

Migrating ScaleGrid for Redis™ data from one server to another is a common requirement that we hear from our customers. Two of the main reasons are hardware migration and the need to split data between servers. Typically, you want to migrate with minimal downtime while using the standard Redis […].

DZone

APRIL 26, 2022

This has not only led to AI acceleration being incorporated into common chip architectures such as CPUs, GPUs, and FPGAs, but has also given rise to a class of dedicated hardware AI accelerators specifically designed to accelerate artificial neural networks and machine learning applications.

DZone

FEBRUARY 8, 2022

But what metric shows that a group of users is monopolizing a service's hardware? Quality metrics include the ratio of successfully processed requests, the distribution of processing time across requests, and curves that depend on the number of requests. The absence of such a metric reduces the quality of the service and user satisfaction.

DZone

JULY 26, 2022

In a nutshell, compatibility testing is a type of software testing that confirms whether your software is capable of running on various mobile devices, operating systems, hardware, applications, or network environments.

Dynatrace

MARCH 8, 2021

We do our best to provide support for all popular hardware and OS platforms that are used by our customers for the hosting of their business services. Please check our detailed OneAgent support matrix to learn about feature availability on specific hardware and software platforms. What about ActiveGates? What about Dynatrace Managed?

Dynatrace

JULY 11, 2024

The ongoing drive for digital transformation has led to a dramatic shift in the role of IT departments. They’ve gone from just maintaining their organization’s hardware and software to becoming an essential function for meeting strategic business objectives. Business observability is emerging as the answer.

DZone

OCTOBER 6, 2021

Differences in OS, screen size, screen density, and hardware can all affect how an app behaves and impact the user experience. When testing the performance of a native Android or iOS app, choosing the right set of devices is critical for maximizing your chances of success.

DZone

OCTOBER 2, 2023

An RTOS is an operating system designed with a specific purpose in mind: to manage hardware resources and execute tasks within a stringent time frame. This article delves into the world of RTOS, examining its core, contrasting it with general-purpose operating systems, and exploring its relevance in today's technologically advanced landscape.

Dynatrace

DECEMBER 8, 2021

This centralization means all aspects of the system can share underlying hardware, are generally written in the same programming language, and the operating system level monitoring and diagnostic tools can help developers understand the entire state of the system.

DZone

JANUARY 25, 2023

Imagine benefiting from an up-to-date, fully loaded operating system on a 90s-style hardware configuration of 512 MB and a single-core CPU. Linux could be a fantastic choice for your next cloud server. Apart from its technical benefits, it is the cheapest option, so you may already have decided to run your services on it.

Dynatrace

JUNE 10, 2024

Finally, observability helps organizations understand the connections between disparate software, hardware, and infrastructure resources. For example, updating a piece of software might cause a hardware compatibility issue, which translates to an infrastructure challenge.
