The Three Cs: Concatenate, Compress, Cache

CSS Wizardry

OCTOBER 16, 2023

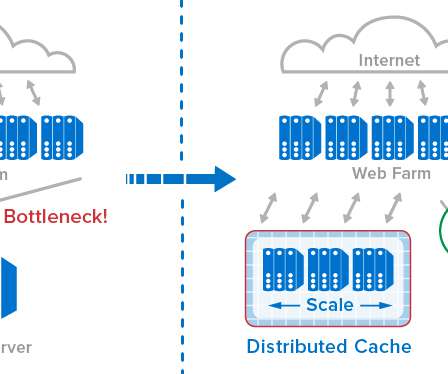

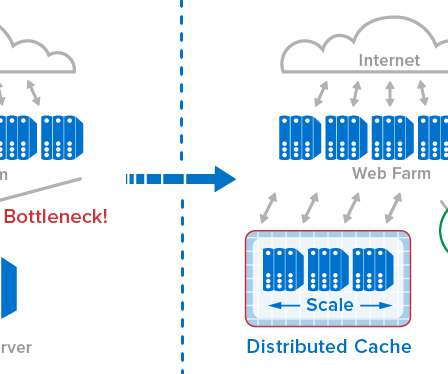

Caching them at the other end: how long should we cache files on a user’s device?

Cache

This is the easy one. Caching is something I’ve been a little obsessed with lately, but for static assets like the ones we’re discussing today, we don’t need to know much other than this: cache everything as aggressively as possible.
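As a rough illustration of "cache everything as aggressively as possible", here is a minimal sketch of a WSGI middleware that sets a long-lived `Cache-Control` header on static assets. It assumes that assets are fingerprinted (a content hash in the file name, e.g. `app.3f9a1c2b.css`), which is what makes a one-year `max-age` plus `immutable` safe: a changed file gets a new URL. The file-name pattern, the list of extensions, the helper names `cache_control_for` and `with_cache_headers`, and the `no-cache` fallback for everything else are all hypothetical choices for this sketch, not something prescribed here.

```python
import re

# Hypothetical convention: fingerprinted assets carry a hex content hash
# (8+ characters) right before the extension, e.g. "app.3f9a1c2b.css".
FINGERPRINTED = re.compile(r"\.[0-9a-f]{8,}\.(?:css|js|woff2|png|jpg|svg)$")

ONE_YEAR = 60 * 60 * 24 * 365  # 31,536,000 seconds


def cache_control_for(path: str) -> str:
    """Choose a Cache-Control value for the requested path."""
    if FINGERPRINTED.search(path):
        # The content at this URL never changes, so cache it for a year
        # and tell the browser not to revalidate it (RFC 8246 `immutable`).
        return f"public, max-age={ONE_YEAR}, immutable"
    # Assumption: anything not fingerprinted (HTML, etc.) must be
    # revalidated with the server before each reuse.
    return "no-cache"


def with_cache_headers(app):
    """Wrap a WSGI app so every response gets our Cache-Control header."""
    def wrapped(environ, start_response):
        path = environ.get("PATH_INFO", "")

        def start(status, headers, exc_info=None):
            # Drop any Cache-Control the inner app set, then add ours.
            headers = [(k, v) for k, v in headers if k.lower() != "cache-control"]
            headers.append(("Cache-Control", cache_control_for(path)))
            return start_response(status, headers, exc_info)

        return app(environ, start)

    return wrapped
```

Usage would look like `application = with_cache_headers(application)`. Doing this in middleware rather than per route keeps the caching policy in one place; in practice the same policy is often configured in the web server or CDN instead.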
