How to Optimize CPU Performance Through Isolation and System Tuning

DZone

MAY 1, 2023

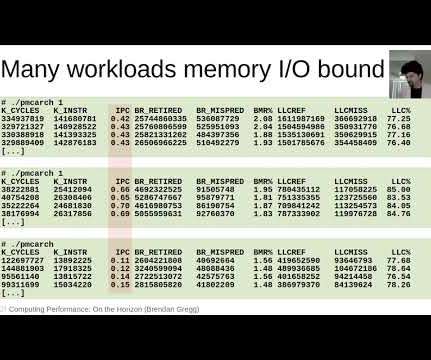

CPU isolation and efficient system management are critical for any application that requires low-latency, high-performance computing. Modern production environments expose numerous hardware and software hooks that can be tuned to improve both latency and throughput.
