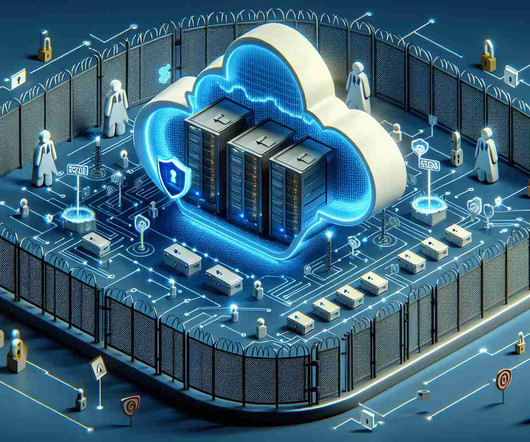

Key Advantages of DBMS for Efficient Data Management

ScaleGrid

JANUARY 5, 2024

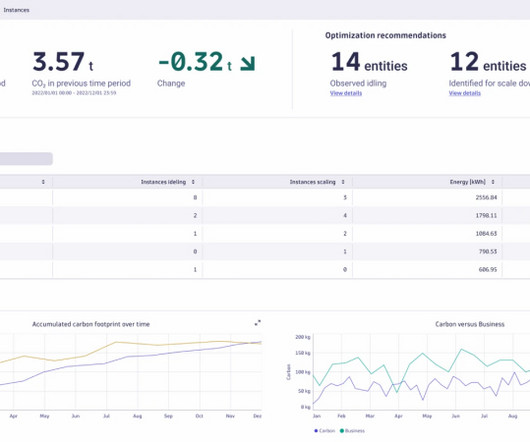

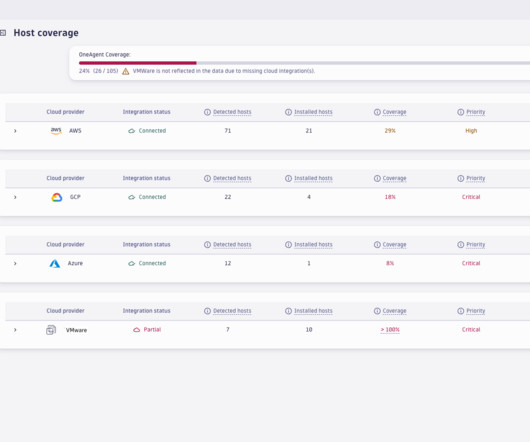

A DBMS offers enhanced data security, better data integrity, and efficient access to information. Despite the initial investment, it delivers long-term savings and greater efficiency through automated processes, query optimization, and scalability, which in turn support better decision-making and end-user productivity.
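The data-integrity and query-efficiency points can be made concrete with a small sketch. It uses Python's built-in `sqlite3` module purely as a stand-in for a DBMS (the article does not name a specific product), and the `customers`/`orders` tables and the `idx_orders_customer` index are hypothetical. The sketch shows declared constraints rejecting bad rows, a transaction that rolls back as a unit, and the query planner choosing an index.

```python
import sqlite3


def demo() -> dict:
    # Hypothetical schema in an in-memory database; nothing touches disk.
    conn = sqlite3.connect(":memory:")
    try:
        # SQLite enforces foreign keys only when this pragma is enabled.
        conn.execute("PRAGMA foreign_keys = ON")
        conn.execute(
            "CREATE TABLE customers ("
            " id INTEGER PRIMARY KEY,"
            " email TEXT NOT NULL UNIQUE)"
        )
        conn.execute(
            "CREATE TABLE orders ("
            " id INTEGER PRIMARY KEY,"
            " customer_id INTEGER NOT NULL REFERENCES customers(id),"
            " amount REAL NOT NULL CHECK (amount > 0))"
        )
        conn.execute("INSERT INTO customers (email) VALUES ('a@example.com')")
        conn.commit()

        # Integrity: the DBMS itself rejects rows that break declared rules.
        rejected = []
        bad_rows = [
            ("INSERT INTO customers (email) VALUES ('a@example.com')", "duplicate email"),
            ("INSERT INTO orders (customer_id, amount) VALUES (1, -5)", "negative amount"),
            ("INSERT INTO orders (customer_id, amount) VALUES (99, 10)", "unknown customer"),
        ]
        for sql, label in bad_rows:
            try:
                conn.execute(sql)
            except sqlite3.IntegrityError:
                rejected.append(label)

        # Transaction: both orders are stored, or neither is.
        try:
            with conn:  # commits on success, rolls back on exception
                conn.execute("INSERT INTO orders (customer_id, amount) VALUES (1, 20)")
                conn.execute("INSERT INTO orders (customer_id, amount) VALUES (1, 0)")
        except sqlite3.IntegrityError:
            pass  # the second insert violates CHECK, so the first is undone too
        order_count = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]

        # Efficient access: with an index, the planner avoids a full table scan.
        conn.execute("CREATE INDEX idx_orders_customer ON orders(customer_id)")
        plan_rows = conn.execute(
            "EXPLAIN QUERY PLAN SELECT SUM(amount) FROM orders WHERE customer_id = ?",
            (1,),
        ).fetchall()
        plan = " | ".join(str(row[-1]) for row in plan_rows)  # last column is the detail text

        return {"rejected": rejected, "orders_after_rollback": order_count, "plan": plan}
    finally:
        conn.close()


if __name__ == "__main__":
    print(demo())
```

A production DBMS exposes the same ideas (constraints, transactions, indexes, plan inspection), but the exact syntax and planner output differ from SQLite's.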

Let's personalize your content