Key Advantages of DBMS for Efficient Data Management

Scalegrid

JANUARY 5, 2024

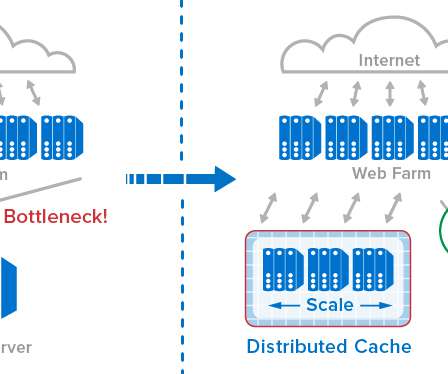

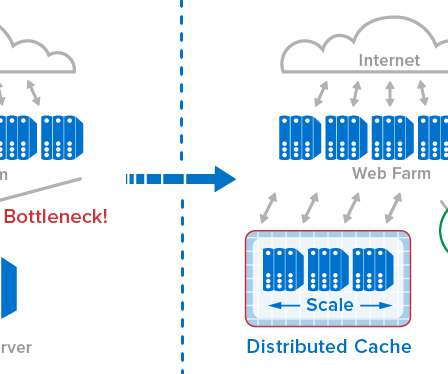

What are the key advantages of a DBMS? In short: enhanced data security, better data integrity, and efficient access to information. This article cuts through the complexity to showcase these tangible benefits, equipping you with the knowledge to make informed decisions about your data management strategy.
