Optimizing CDN Architecture: Enhancing Performance and User Experience

IO River

NOVEMBER 2, 2023

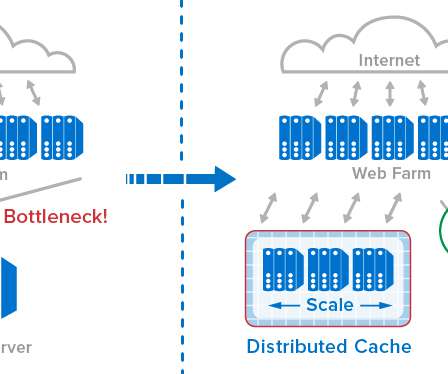

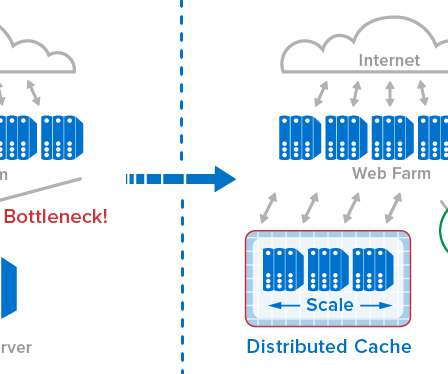

CDNs cache content on edge servers distributed around the world, reducing the distance between users and the content they request. These servers are grouped into Points of Presence (PoPs), and CDNs use load-balancing techniques to distribute incoming traffic across them, so content is served from a location close to each end user and overall performance improves.
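To make the idea concrete, here is a minimal sketch in Python of the two steps described above: picking a nearby PoP for a user, then spreading requests across the edge servers inside it. The PoP names, coordinates, server names, and the functions `nearest_pop` and `route_request` are all hypothetical and chosen for illustration. Production CDNs typically rely on mechanisms such as anycast or DNS-based routing and on live health and load data, not on a plain geographic distance calculation.

```python
import itertools
import math

# Hypothetical PoPs: name -> (latitude, longitude, edge servers in that PoP).
POPS = {
    "fra": (50.11, 8.68, ["fra-edge-1", "fra-edge-2"]),
    "nyc": (40.71, -74.01, ["nyc-edge-1", "nyc-edge-2"]),
    "sin": (1.35, 103.82, ["sin-edge-1", "sin-edge-2"]),
}

# One round-robin iterator per PoP, used to balance load across its servers.
_round_robin = {
    name: itertools.cycle(servers) for name, (_, _, servers) in POPS.items()
}


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points on Earth, in kilometres."""
    earth_radius_km = 6371.0
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = (
        math.sin(d_phi / 2) ** 2
        + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    )
    return 2 * earth_radius_km * math.asin(math.sqrt(a))


def nearest_pop(user_lat, user_lon):
    """Return the name of the PoP geographically closest to the user."""
    return min(
        POPS,
        key=lambda name: haversine_km(
            user_lat, user_lon, POPS[name][0], POPS[name][1]
        ),
    )


def route_request(user_lat, user_lon):
    """Pick the nearest PoP, then the next edge server in round-robin order."""
    pop = nearest_pop(user_lat, user_lon)
    return pop, next(_round_robin[pop])


if __name__ == "__main__":
    # A user in Paris: two consecutive requests land on different servers
    # within the same PoP.
    print(route_request(48.86, 2.35))
    print(route_request(48.86, 2.35))
```

Round-robin is only one of several load-balancing strategies; it is used here because it is the simplest to follow. A weighted or least-connections policy could be swapped in by changing how `route_request` selects a server.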
