For your eyes only: improving Netflix video quality with neural networks

The Netflix TechBlog

NOVEMBER 17, 2022

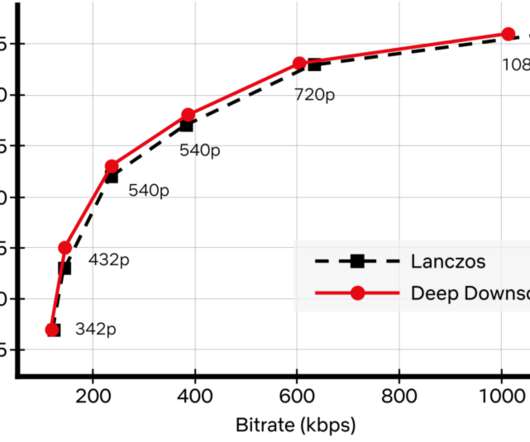

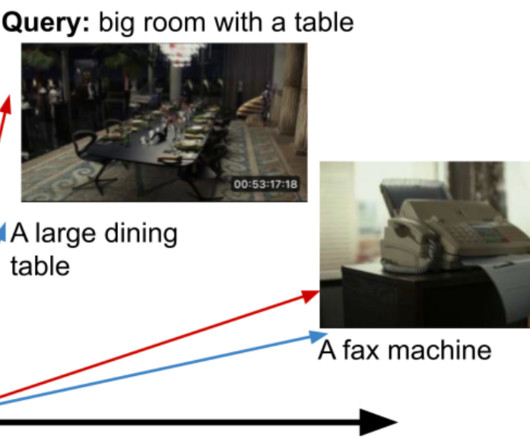

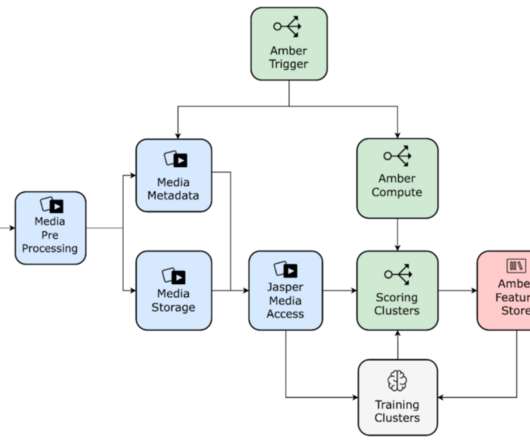

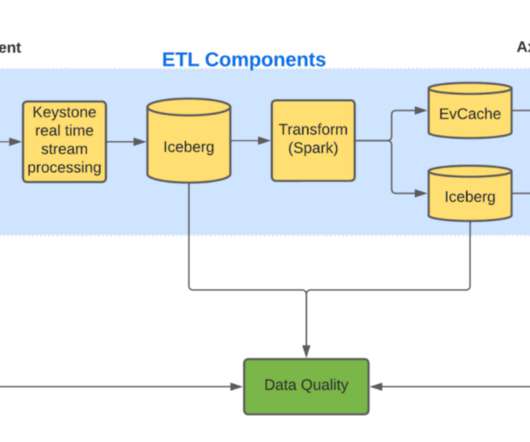

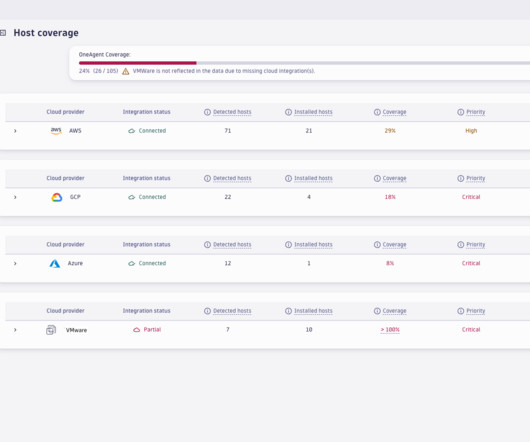

Recently, we added another powerful tool to our arsenal: neural networks for video downscaling. In this tech blog, we describe how we improved Netflix video quality with neural networks, the challenges we faced and what lies ahead.

How can neural networks fit into Netflix video encoding?
