Best practices for Fluent Bit 3.0

Dynatrace

MAY 7, 2024

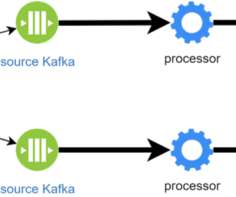

Fluent Bit is a telemetry agent designed to receive data (logs, traces, and metrics), process or modify it, and export it to a destination. Because it can perform various actions on the data along the way, you can also use Fluent Bit as a processor. The question to ask yourself is: how much data should Fluent Bit process?

What’s new in Fluent Bit 3.0
