What is IT operations analytics? Extract more data insights from more sources

Dynatrace

MAY 1, 2023

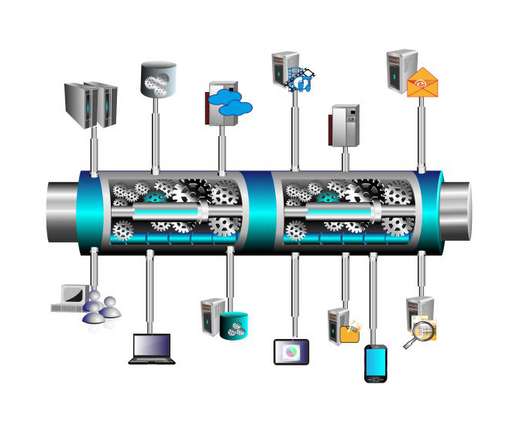

IT operations analytics (ITOA) is the process of unifying, storing, and contextually analyzing operational data to understand the health of applications, infrastructure, and environments, and to streamline everyday operations. Here are the six steps of a typical ITOA process: Define the data infrastructure strategy.
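To make "unifying, storing, and contextually analyzing" a little more concrete, here is a minimal Python sketch. It is only an illustration, not how Dynatrace or any particular ITOA product works: the record fields, the `unify` and `analyze` helpers, and the 80% CPU threshold are all assumptions made up for this example.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical records from two separate sources (fields are illustrative).
metrics = [
    {"host": "web-1", "cpu_pct": 91.0},
    {"host": "web-1", "cpu_pct": 88.0},
    {"host": "db-1", "cpu_pct": 35.0},
]
logs = [
    {"host": "web-1", "level": "ERROR"},
    {"host": "web-1", "level": "INFO"},
    {"host": "db-1", "level": "INFO"},
]


def unify(metrics, logs):
    """Unify and store: group records from both sources under a shared key (the host)."""
    store = defaultdict(lambda: {"cpu": [], "errors": 0})
    for m in metrics:
        store[m["host"]]["cpu"].append(m["cpu_pct"])
    for entry in logs:
        if entry["level"] == "ERROR":
            store[entry["host"]]["errors"] += 1
    return dict(store)


def analyze(store, cpu_threshold=80.0):
    """Analyze in context: flag hosts with high average CPU that also logged errors."""
    return sorted(
        host
        for host, data in store.items()
        if data["cpu"] and mean(data["cpu"]) > cpu_threshold and data["errors"] > 0
    )


unhealthy = analyze(unify(metrics, logs))
print(unhealthy)  # ['web-1']
```

The point of the sketch is the shared key: neither the metrics nor the logs alone say much about `web-1`, but once both are stored against the same host, a simple rule can surface it as a candidate for investigation.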
