Key Advantages of DBMS for Efficient Data Management

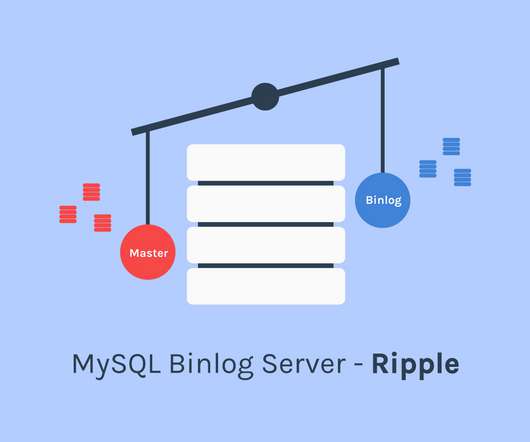

ScaleGrid

JANUARY 5, 2024

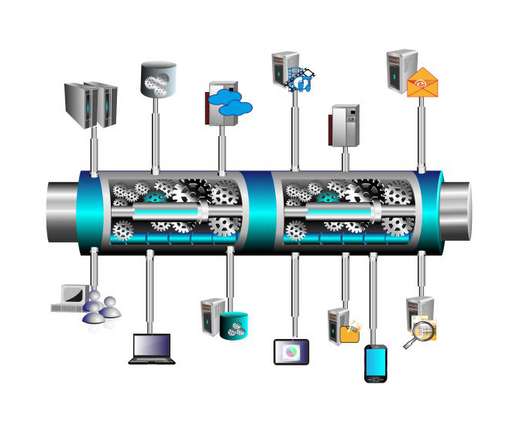

A database management system offers enhanced data security, better data integrity, and efficient access to information. If you're considering one, understanding these benefits is crucial.

Understanding Database Management Systems (DBMS)

A Database Management System (DBMS) is software that helps users create and manage databases.
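To make the idea of a DBMS creating a database and protecting its integrity concrete, here is a minimal sketch using SQLite through Python's standard `sqlite3` module. The article names no particular DBMS, so SQLite is an assumption made for illustration, and the `customers` table, its columns, and the `demo_integrity` function are hypothetical.

```python
import sqlite3


def demo_integrity():
    """Create a small in-memory database and show a constraint being enforced."""
    # An in-memory database keeps the example self-contained (no files written).
    conn = sqlite3.connect(":memory:")

    # The DBMS creates the table and records the rules the data must follow.
    # Table and column names are hypothetical, chosen only for this sketch.
    conn.execute(
        "CREATE TABLE customers ("
        "id INTEGER PRIMARY KEY, "
        "email TEXT NOT NULL UNIQUE)"
    )

    # A valid row is accepted.
    conn.execute("INSERT INTO customers (email) VALUES (?)", ("ada@example.com",))
    conn.commit()

    # A duplicate email violates the UNIQUE constraint, so the DBMS rejects it.
    try:
        conn.execute("INSERT INTO customers (email) VALUES (?)", ("ada@example.com",))
        rejected = False
    except sqlite3.IntegrityError:
        rejected = True

    # Reading the table back shows that only the valid row was stored.
    rows = conn.execute("SELECT id, email FROM customers").fetchall()
    conn.close()
    return rejected, rows


if __name__ == "__main__":
    rejected, rows = demo_integrity()
    print("duplicate rejected:", rejected)
    print("stored rows:", rows)
```

The point of the sketch is that the rule lives in the database rather than in application code: any program that writes to this table is held to the same constraint.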
