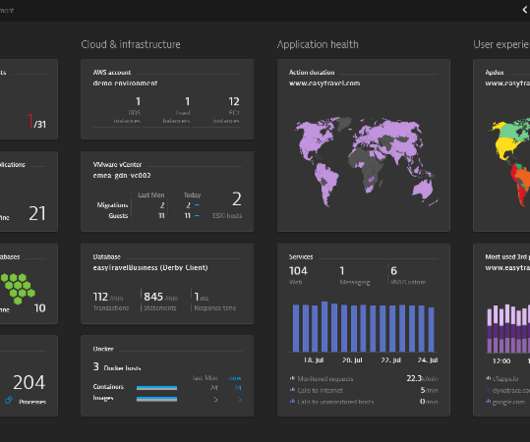

Exploratory analytics and collaborative analytics capabilities democratize insights across teams

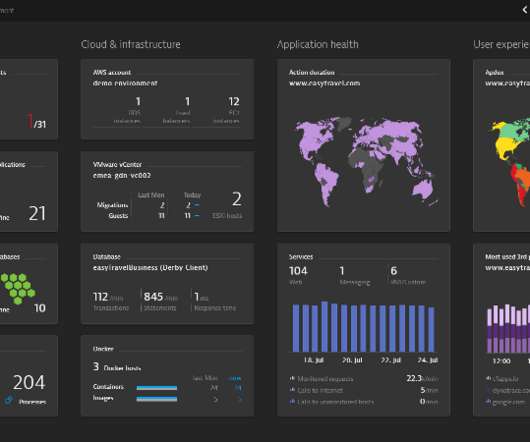

Dynatrace

APRIL 25, 2023

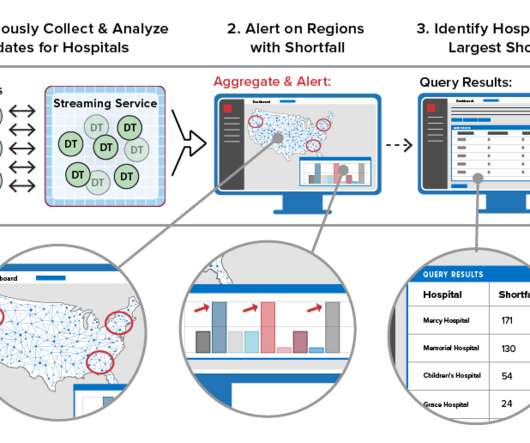

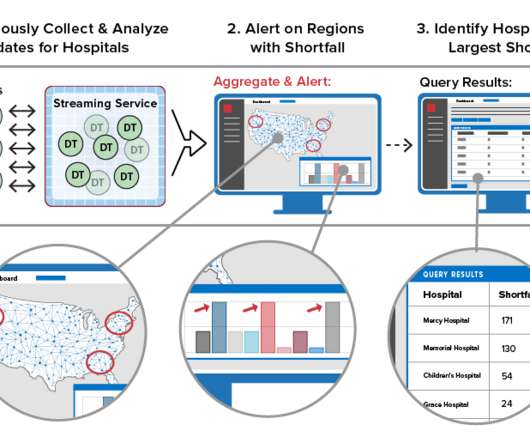

Having access to large data sets is helpful only if organizations can draw insights from the information. Exploratory analytics can help teams understand the stories hidden within the data and share valuable insights. “That is what exploratory analytics is for,” Schumacher explains.
