Ensuring the Successful Launch of Ads on Netflix

The Netflix TechBlog

JUNE 1, 2023

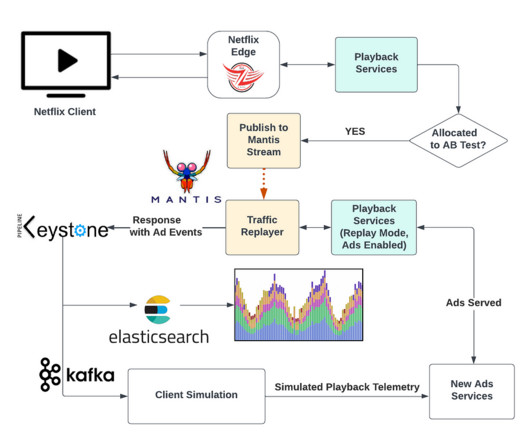

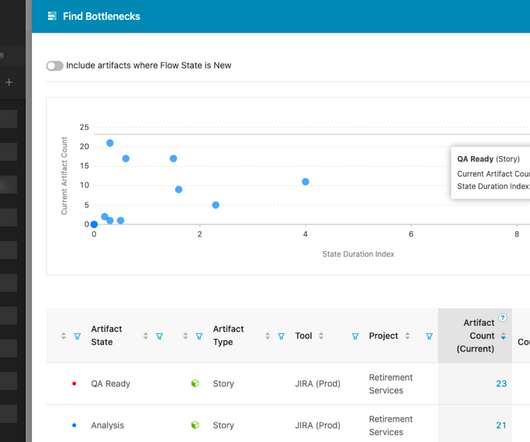

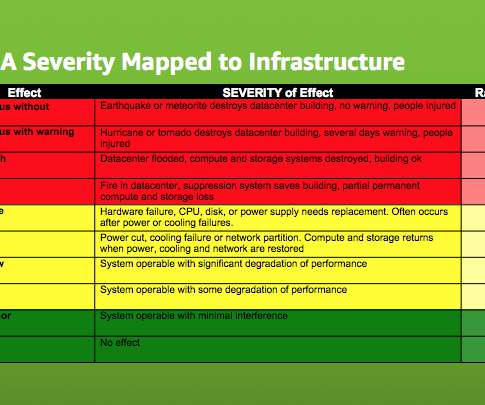

Basic with ads was launched worldwide on November 3rd. In this blog post, we’ll discuss the methods we used to ensure a successful launch, including how we tested the system, the Netflix technologies involved, and the best practices we developed.

Realistic Test Traffic

Netflix traffic ebbs and flows throughout the day in a sinusoidal pattern.
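To make the sinusoidal daily pattern concrete, here is a minimal sketch of how a test-traffic generator might compute its target request rate for a given hour. This is an illustration only, not the tooling Netflix used: the function name `target_rps`, its parameters, the 20:00 peak hour, and the numbers in the usage lines are all hypothetical assumptions.

```python
import math


def target_rps(hour_of_day: float, mean_rps: float, amplitude_rps: float,
               peak_hour: float = 20.0) -> float:
    """Return a target request rate that follows one sine cycle per 24 hours.

    All parameters are illustrative assumptions:
    - mean_rps: average request rate over the day
    - amplitude_rps: how far the rate swings above and below the mean
    - peak_hour: hour of day (0-24) at which traffic is assumed to peak
    """
    # Shift the cosine so its maximum lands on peak_hour.
    phase = 2 * math.pi * (hour_of_day - peak_hour) / 24.0
    # Clamp at zero so a large amplitude never yields a negative rate.
    return max(0.0, mean_rps + amplitude_rps * math.cos(phase))


# Hypothetical hourly schedule for a load test: 24 target rates, one per hour.
hourly_schedule = [target_rps(h, mean_rps=1000.0, amplitude_rps=400.0)
                   for h in range(24)]
```

With these assumed values, the rate peaks at the mean plus the amplitude at `peak_hour` and bottoms out at the mean minus the amplitude twelve hours earlier or later. Replaying test traffic along such a curve, rather than at a flat rate, exercises a system under the same rising and falling load it would see in production.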
