Test ETag Browser Caching with cURL Requests

The Polyglot Developer

SEPTEMBER 9, 2019

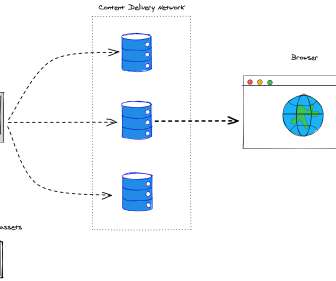

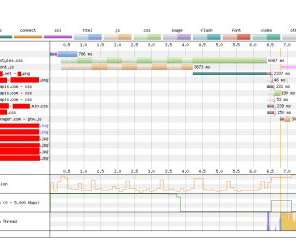

Recently I’ve been playing around with Netlify, and as a result I’m becoming more familiar with the caching strategies commonly used by content delivery networks (CDNs). One such strategy uses ETag identifiers for web resources.
