Best practices and key metrics for improving mobile app performance

Dynatrace

DECEMBER 13, 2023

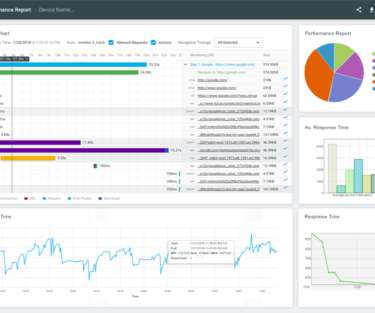

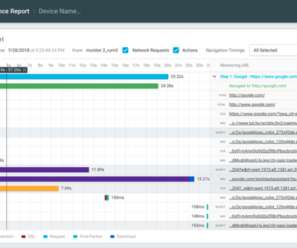

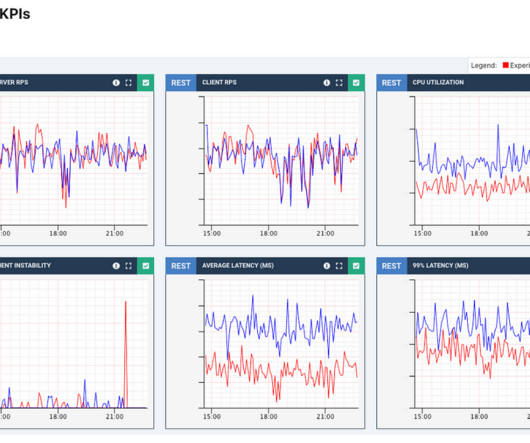

Mobile applications (apps) are an increasingly important channel for reaching customers, but the distributed nature of mobile app platforms and delivery networks can cause performance problems that leave users frustrated or, worse, turning to competitors. Key metrics to track include load time and network latency, and a core best practice is to minimize network requests.
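As a rough illustration of how a latency metric like the ones mentioned above might be summarized, here is a minimal Python sketch. It assumes you have already collected request latencies in milliseconds. The function name `summarize_latency`, the sample values, and the choice of median plus nearest-rank 95th percentile are illustrative assumptions, not something the article prescribes.

```python
import math
from statistics import median


def summarize_latency(samples_ms):
    """Return the median and nearest-rank 95th percentile of latency samples (ms).

    Hypothetical helper for illustration only.
    """
    if not samples_ms:
        raise ValueError("need at least one sample")
    ordered = sorted(samples_ms)
    # Nearest-rank percentile: the smallest sample such that at least
    # 95% of all samples are less than or equal to it.
    rank = math.ceil(0.95 * len(ordered))
    return {"median_ms": median(ordered), "p95_ms": ordered[rank - 1]}


# Made-up sample latencies; one slow outlier dominates the tail.
samples = [120, 95, 110, 480, 105, 98, 130, 101, 99, 115]
print(summarize_latency(samples))
```

Reporting a tail percentile alongside the median is one common way to keep a few slow requests from being hidden by a typical-case number.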
