The Three Cs: Concatenate, Compress, Cache

CSS Wizardry

OCTOBER 16, 2023

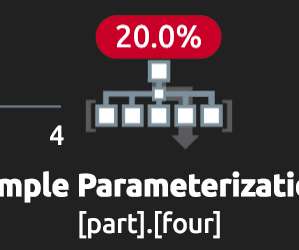

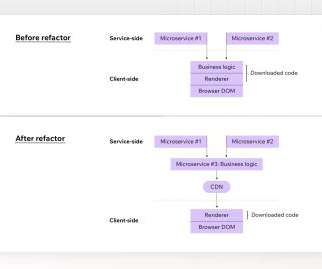

What is the availability, configurability, and efficacy of each?

Caching them at the other end: How long should we cache files on a user’s device?

In our specific examples above, the one-big-file pattern incurred 201ms of latency, whereas the many-files approach accumulated 4,362ms by comparison.

`main.af8a22.css`
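As a rough illustration of the "how long should we cache" question, here is a minimal sketch that picks a `Cache-Control` value based on whether a filename looks fingerprinted, as `main.af8a22.css` does. The function name `cache_control`, the regular expression, and the specific header values are assumptions made for this example, not something the article prescribes.

```python
import re

# Assumption: a fingerprinted file carries a hex content hash of six or more
# characters just before its extension, e.g. main.af8a22.css.
FINGERPRINT = re.compile(r"\.[0-9a-f]{6,}\.(?:css|js)$")


def cache_control(filename: str) -> str:
    """Return an illustrative Cache-Control header value for a static file."""
    if FINGERPRINT.search(filename):
        # The URL changes whenever the content changes, so the file can be
        # cached for a long time (one year here, an assumed value).
        return "public, max-age=31536000, immutable"
    # Without a fingerprint the URL is reused across releases, so ask the
    # browser to revalidate before reusing its cached copy.
    return "no-cache"


if __name__ == "__main__":
    for name in ("main.af8a22.css", "main.css"):
        print(name, "->", cache_control(name))
```

The design choice is that a long cache lifetime is only safe when the filename itself changes with the content; anything else is revalidated on each use.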
