Dynatrace accelerates business transformation with new AI observability solution

Dynatrace

JANUARY 31, 2024

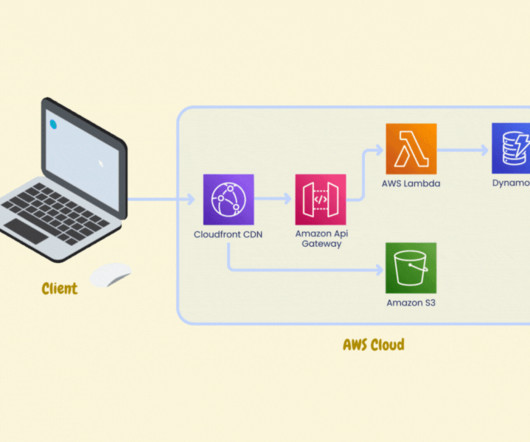

Retrieval-augmented generation emerges as the standard architecture for LLM-based applications

Given that LLMs can generate factually incorrect or nonsensical responses, retrieval-augmented generation (RAG) has emerged as an industry standard for building GenAI applications.

… million AI server units annually by 2027, consuming 75.4+ …
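As a rough illustration of the retrieval-augmented pattern, the sketch below retrieves the stored passages most relevant to a question and adds them to the prompt, so the model is asked to answer from supplied facts rather than from memory alone. It is a minimal sketch under stated assumptions, not Dynatrace's implementation. A toy bag-of-words cosine similarity stands in for a real embedding model and vector store. The helpers `retrieve` and `build_prompt` are hypothetical names. The actual LLM call is omitted.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase the text and split it into alphanumeric tokens
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two term-frequency vectors
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    # Hypothetical retriever: rank documents by similarity to the query, keep the top k
    q = Counter(tokenize(query))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    # Augment the question with retrieved context before it would be sent to an LLM
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    docs = [
        "Invoices are due 30 days after issue.",
        "The office is closed on public holidays.",
        "Late invoices incur a 2 percent fee.",
    ]
    print(build_prompt("When are invoices due?", docs))
```

In a production RAG pipeline the similarity function would typically be replaced by dense embeddings and an approximate nearest-neighbor index, but the retrieve-then-augment shape stays the same.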
