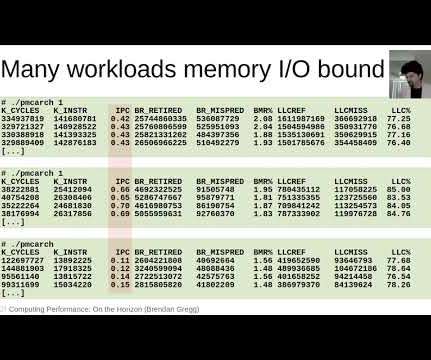

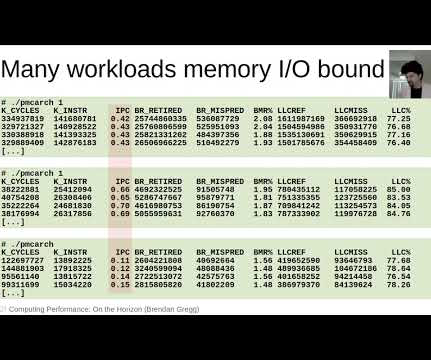

USENIX LISA2021 Computing Performance: On the Horizon

Brendan Gregg

JULY 4, 2021

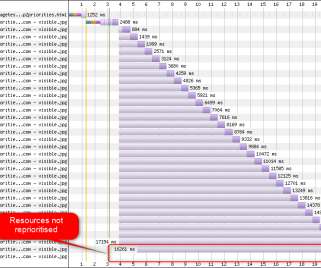

AWS Graviton2); for memory, with the arrival of DDR5 and on-processor High Bandwidth Memory (HBM); for storage, including new uses of 3D XPoint as a 3D NAND accelerator; for networking, with the rise of QUIC and eXpress Data Path (XDP); and so on.