A Comprehensive Guide: Installing Docker, Running Containers, Managing Storage, and Setting up Networking

DZone

JANUARY 8, 2024

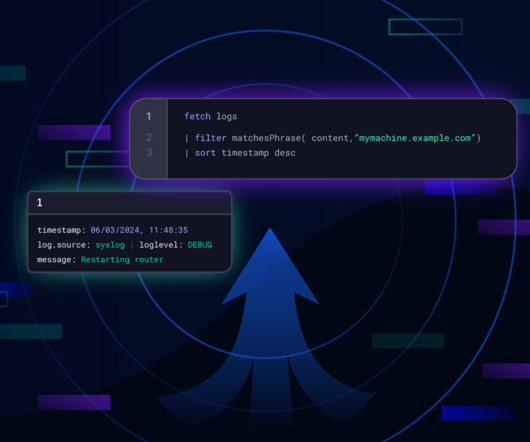

This comprehensive guide walks you through the essential steps of working with Docker: installing it, running containers, managing storage, and setting up networking. Docker is a leading containerization platform that makes it easier to package and distribute applications as portable, isolated environments.
