Dynatrace supports the newly released AWS Lambda Response Streaming

Dynatrace

APRIL 7, 2023

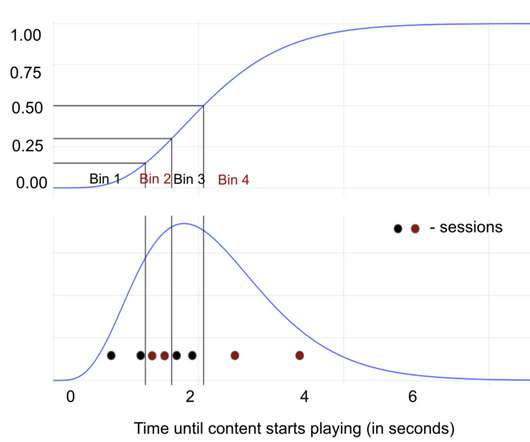

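Customers can use AWS Lambda Response Streaming to improve performance for latency-sensitive applications and to return larger payload sizes. Instead of buffering the entire response before sending it, a function streams its response back to the client as the data becomes available, which reduces the time to first byte. Because this runs on Lambda, the function owner gets these benefits without having to provision or manage servers.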
