Architectural Insights: Designing Efficient Multi-Layered Caching With Instagram Example

DZone

FEBRUARY 27, 2024

Leveraging this hierarchical structure can significantly reduce latency and improve overall performance.

The Netflix TechBlog

SEPTEMBER 29, 2022

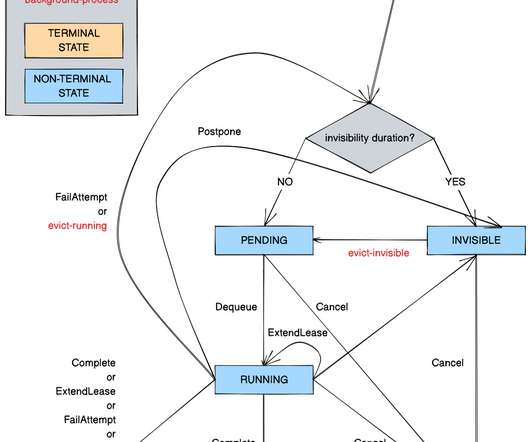

Timestone: Netflix’s High-Throughput, Low-Latency Priority Queueing System with Built-in Support for Non-Parallelizable Workloads, by Kostas Christidis. Introduction: Timestone is a high-throughput, low-latency priority queueing system we built in-house to support the needs of Cosmos, our media encoding platform.

The Netflix TechBlog

MARCH 7, 2024

The Machine Learning Platform (MLP) team at Netflix provides an entire ecosystem of tools around Metaflow, an open source machine learning infrastructure framework we started, to empower data scientists and machine learning practitioners to build and manage a variety of ML systems. ETL workflows), as well as downstream (e.g.

DZone

JUNE 22, 2023

API performance optimization is the process of improving the speed, scalability, and reliability of APIs. It involves a combination of techniques and best practices aimed at reducing latency, improving user experience, and increasing the overall efficiency of the system.

Scalegrid

FEBRUARY 8, 2024

A distributed storage system is foundational in today’s data-driven landscape, ensuring data spread over multiple servers is reliable, accessible, and manageable. This guide delves into how these systems work, the challenges they solve, and their essential role in businesses and technology.

Scalegrid

JANUARY 25, 2024

Key Takeaways Critical performance indicators such as latency, CPU usage, memory utilization, hit rate, and number of connected clients/slaves/evictions must be monitored to maintain Redis’s high throughput and low latency capabilities. These essential data points heavily influence both stability and efficiency within the system.

Dynatrace

SEPTEMBER 13, 2023

This is a set of best practices and guidelines that help you design and operate reliable, secure, efficient, cost-effective, and sustainable systems in the cloud. The framework comprises six pillars: Operational Excellence, Security, Reliability, Performance Efficiency, Cost Optimization, and Sustainability.

Dynatrace

JANUARY 31, 2024

GenAI is prone to erratic behavior due to unforeseen data scenarios or underlying system issues. Dynatrace provides end-to-end observability of AI applications As AI systems grow in complexity, a holistic approach to the observability of AI-powered applications becomes even more crucial.

Scalegrid

MARCH 28, 2024

Redis Data Types and Structures The design of Redis’s data structures emphasizes versatility. Snapshots provide point-in-time captures of the dataset, which are efficient for recovery on startup. It is designed to cache plain text values, offering fast read and write access to frequently accessed data.

Dynatrace

JANUARY 17, 2024

As organizations turn to artificial intelligence for operational efficiency and product innovation in multicloud environments, they have to balance the benefits with skyrocketing costs associated with AI. An AI observability strategy—which monitors IT system performance and costs—may help organizations achieve that balance.

Scalegrid

JANUARY 8, 2024

This article delves into the specifics of how AI optimizes cloud efficiency, ensures scalability, and reinforces security, providing a glimpse at its transformative role without giving away extensive details. Using AI for Enhanced Cloud Operations The integration of AI in cloud computing is enhancing operational efficiency in several ways.

The Netflix TechBlog

MARCH 4, 2024

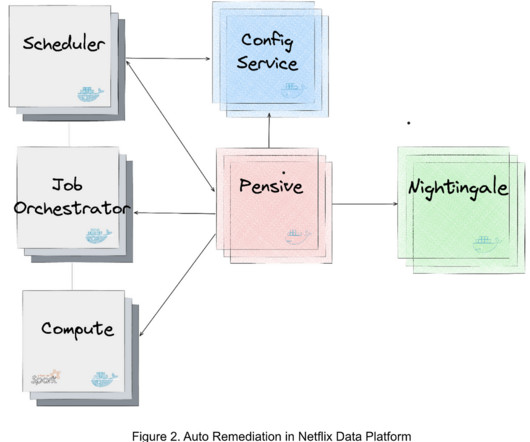

We have deployed Auto Remediation in production for handling memory configuration errors and unclassified errors of Spark jobs and observed its efficiency and effectiveness (e.g., For efficient error handling, Netflix developed an error classification service, called Pensive, which leverages a rule-based classifier for error classification.

Dynatrace

JULY 24, 2023

In the world of DevOps and SRE, DevOps automation answers the undeniable need for efficiency and scalability. This evolution in automation, referred to as answer-driven automation, empowers teams to address complex issues in real time, optimize workflows, and enhance overall operational efficiency.

The Morning Paper

OCTOBER 11, 2020

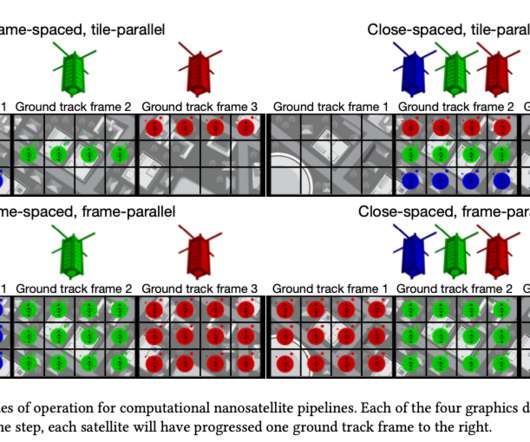

Orbital edge computing: nanosatellite constellations as a new class of computer system, Denby & Lucia, ASPLOS’20. Only space system architects don’t call it request-response, they call it a ‘bent-pipe architecture.’ The old ground-initiated command-and-control style systems aren’t going to work for these finer-grained systems.

Scalegrid

MARCH 22, 2024

They can also bolster uptime and limit latency issues or potential downtimes. Choosing the Right Cloud Services Choosing the right cloud services is crucial in developing an efficient multi-cloud strategy. Register now for free and experience the seamless operation of your databases across multi-cloud and hybrid-cloud systems.

Scalegrid

JANUARY 12, 2024

Simply put, it’s the set of computational tasks that cloud systems perform, such as hosting databases, enabling collaboration tools, or running compute-intensive algorithms. This article analyzes cloud workloads, delving into their forms, functions, and how they influence the cost and efficiency of your cloud infrastructure.

Scalegrid

MARCH 14, 2024

Understanding Hybrid Cloud Strategy A hybrid cloud merges the capabilities of public and private clouds into a singular, coherent system. In practice, a hybrid cloud operates by melding resources and services from multiple computing environments, which necessitates effective coordination, orchestration, and integration to work efficiently.

Dynatrace

DECEMBER 15, 2022

This transition to public, private, and hybrid cloud is driving organizations to automate and virtualize IT operations to lower costs and optimize cloud processes and systems. Besides the traditional system hardware, storage, routers, and software, ITOps also includes virtual components of the network and cloud infrastructure.

Percona

APRIL 17, 2023

In this blog post, we will discuss the best practices on the MongoDB ecosystem applied at the Operating System (OS) and MongoDB levels. Operating System (OS) settings Swappiness Swappiness is a Linux kernel setting that influences the behavior of the Virtual Memory manager when it needs to allocate a swap, ranging from 0-100.

ACM Sigarch

MAY 31, 2023

Introduction Memory systems are evolving into heterogeneous and composable architectures. Heterogeneous and Composable Memory (HCM) offers a feasible solution for terabyte- or petabyte-scale systems, addressing the performance and efficiency demands of emerging big-data applications. The recently announced CXL3.0

Dynatrace

APRIL 5, 2021

The 2014 launch of AWS Lambda marked a milestone in how organizations use cloud services to deliver their applications more efficiently, by running functions at the edge of the cloud without the cost and operational overhead of on-premises servers. Dynatrace news. What is AWS Lambda? Where does Lambda fit in the AWS ecosystem?

The Netflix TechBlog

OCTOBER 26, 2021

False negatives are closely related to the statistical concept of power, which gives the probability of a true positive given the experimental design and a true effect of a specific size. As a result, if the test treatment results in a small reduction in the latency metric, it’s hard to successfully identify?

Brendan Gregg

FEBRUARY 28, 2023

This talk originated from my updates to Systems Performance 2nd Edition, and this was the first time I've given this talk in person! CXL in a way allows a custom memory controller to be added to a system, to increase memory capacity, bandwidth, and overall performance. Ford, et al., “TCP

The Netflix TechBlog

JUNE 4, 2019

Because microprocessors are so fast, computer architecture design has evolved towards adding various levels of caching between compute units and the main memory, in order to hide the latency of bringing the bits to the brains. This avoids thrashing caches too much for B and evens out the pressure on the L3 caches of the machine.

The Morning Paper

NOVEMBER 5, 2019

File systems unfit as distributed storage backends: lessons from 10 years of Ceph evolution Aghayev et al., In this case, the assumption that a distributed storage backend should clearly be layered on top of a local file system. A distributed file system provides a unified view over aggregated storage from multiple physical machines.

Adrian Cockcroft

JANUARY 20, 2023

on Myths and Legends of High Performance Computing — it’s a somewhat light-hearted look at some of the same issues by the leader of the team that built the Fugaku system I mention below. Jack Dongarra talked about the scores, and pointed out the low efficiency on some important workloads. Next generation architectures will use CXL3.0

Dynatrace

OCTOBER 1, 2021

As dynamic systems architectures increase in complexity and scale, IT teams face mounting pressure to track and respond to conditions and issues across their multi-cloud environments. An advanced observability solution can also be used to automate more processes, increasing efficiency and innovation among Ops and Apps teams.

The Netflix TechBlog

OCTOBER 19, 2020

which is difficult when troubleshooting distributed systems. Now let’s look at how we designed the tracing infrastructure that powers Edgar. Troubleshooting a session in Edgar When we started building Edgar four years ago, there were very few open-source distributed tracing systems that satisfied our needs.

Dynatrace

JANUARY 18, 2023

This methodology aims to improve software system reliability using several key categories such as availability, performance, latency, efficiency, capacity, and incident response. DevOps is a tactical approach to creating and delivering software designed to close the gap between development and IT operations.

Percona

DECEMBER 11, 2023

A Dedicated Log Volume (DLV) is a specialized storage volume designed to house database transaction logs separately from the volume containing the database tables. This separation aims to streamline transaction write logging, improving efficiency and consistency.

The Netflix TechBlog

SEPTEMBER 8, 2020

For each route we migrated, we wanted to make sure we were not introducing any regressions: either in the form of missing (or worse, wrong) data, or by increasing the latency of each endpoint. Being able to canary a new route let us verify latency and error rates were within acceptable limits. This meant that data that was static (e.g.

The Morning Paper

NOVEMBER 10, 2019

It’s been clear for a while that software designed explicitly for the data center environment will increasingly want/need to make different design trade-offs to e.g. general-purpose systems software that you might install on your own machines. The desire for CPU efficiency and lower latencies is easy to understand.

Dynatrace

JANUARY 13, 2022

Analyzing a clinician’s clickstream when using an electronic medical record system to better improve the efficiency of data entry. Providing insight into the service latency to help developers identify poorly performing code. This can help to identify performance issues before too many users experience them.

The Morning Paper

FEBRUARY 6, 2020

Stateless is fine until you need state, at which point the coarse-grained solutions offered by current platforms limit the kinds of application designs that work well. On the Cloudburst design team’s wish list: A running function’s ‘hot’ data should be kept physically nearby for low-latency access.

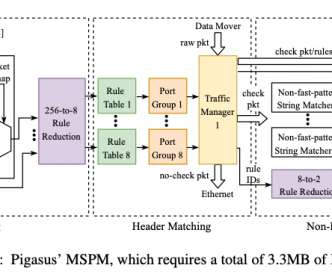

The Morning Paper

NOVEMBER 15, 2020

This stems from a combination of the Jevons paradox and the interconnectedness of systems – doing more in one area often leads to a need for more elsewhere too. At the end of the day, there are three basic ways we can increase capacity: Increasing the number of units in a system (subject to Amdahl’s law). IDS/IPS requirements.

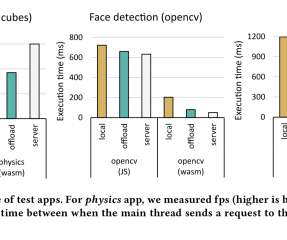

The Morning Paper

JANUARY 30, 2020

Edge servers are the middle ground – more compute power than a mobile device, but with latency of just a few ms. The Mobile Web Worker (MWW) System. The current system assumes an application specific regression model is available on the servers which can predict processing time given the current parameters of the job (e.g.

The Morning Paper

OCTOBER 26, 2020

On the surface this is a paper about fast data ingestion from high-volume streams, with indexing to support efficient querying. As a production system within Microsoft capturing around a quadrillion events and indexing 16 trillion search keys per day it would be interesting in its own right, but there’s a lot more to it than that.

The Netflix TechBlog

NOVEMBER 22, 2019

4:45pm-5:45pm NFX 202 A day in the life of a Netflix Engineer Dave Hahn, SRE Engineering Manager Abstract: Netflix is a large, ever-changing ecosystem serving millions of customers across the globe through cloud-based systems and a globally distributed CDN. We explore all the systems necessary to make and stream content from Netflix.

Dynatrace

APRIL 26, 2022

Digital experience monitoring enables companies to respond to issues more efficiently in real time, and, through enrichment with the right business data, understand how end-user experience of their digital products significantly affects business key performance indicators (KPIs). Endpoint monitoring (EM). Endpoints can be physical (i.e.,

The Morning Paper

SEPTEMBER 10, 2019

Google already has Dremel, Mesa, Photon, F1, PowerDrill, and Spanner, so why did they need yet another data processing system? Because they had too many data processing systems! ;) When each of those use cases is powered by a dedicated back-end, investments in better performance, improved scalability and efficiency etc.

The Morning Paper

MAY 19, 2019

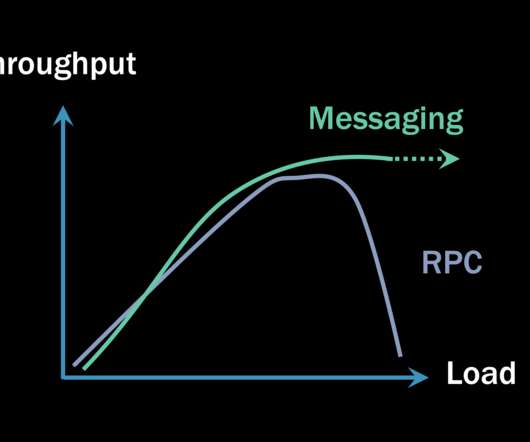

Last week we learned about the increased tail-latency sensitivity of microservices based applications with high RPC fan-outs. Seer uses estimates of queue depths to mitigate latency spikes on the order of 10-100ms, in conjunction with a cluster manager. So what we have here is a glimpse of the limits for low-latency RPCs under load.

Particular Software

SEPTEMBER 20, 2021

Some will claim that any type of RPC communication ends up being faster (meaning it has lower latency) than any equivalent invocation using asynchronous messaging. There are more steps, so the increased latency is easily explained. Garbage collectors are designed under the assumption that memory should be cleaned up reasonably quickly.
