The Three Cs: Concatenate, Compress, Cache

CSS Wizardry

OCTOBER 16, 2023

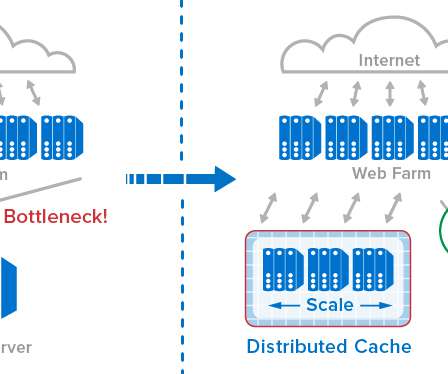

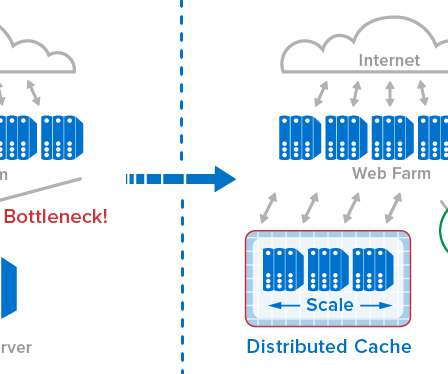

1. Concatenating our files on the server: Are we going to send many smaller files, or are we going to send one monolithic file?
2. Compressing them over the network: Which compression algorithm, if any, will we use?
3. Caching them at the other end: How long should we cache files on a user’s device?

Cache

This is the easy one.
