Supporting Diverse ML Systems at Netflix

The Netflix TechBlog

MARCH 7, 2024

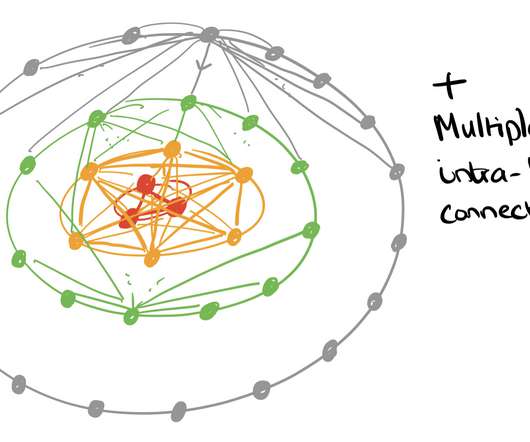

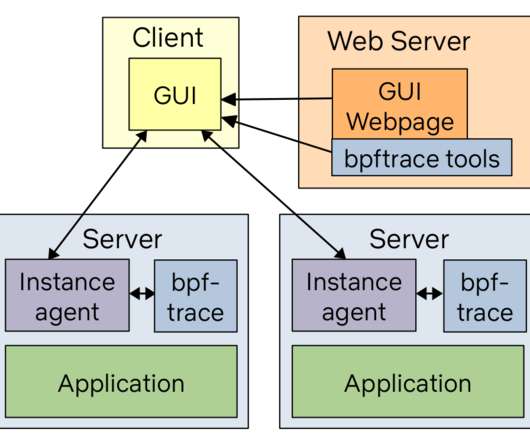

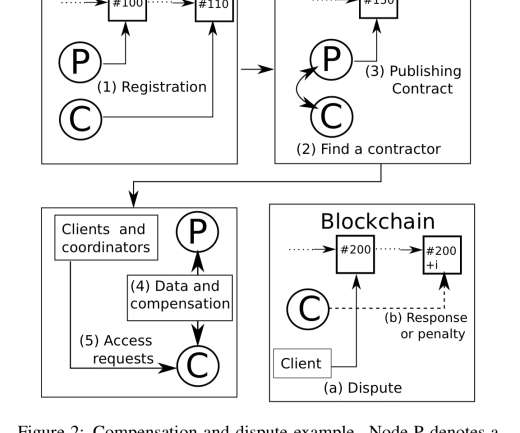

The Machine Learning Platform (MLP) team at Netflix provides an entire ecosystem of tools around Metaflow, an open-source machine learning infrastructure framework we started, to empower data scientists and machine learning practitioners to build and manage a variety of ML systems.
