Why applying chaos engineering to data-intensive applications matters

Dynatrace

MAY 23, 2024

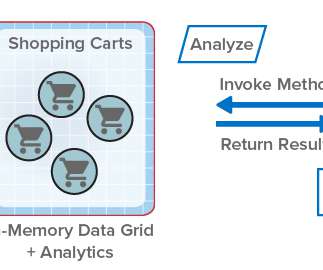

Stream processing frameworks help software engineers build highly scalable, efficient applications that process continuous, high-volume data streams. Because these systems are designed for continuous, low-latency processing, they need fast recovery mechanisms to tolerate and mitigate failures effectively.