Bending pause times to your will with Generational ZGC

The Netflix TechBlog

MARCH 5, 2024

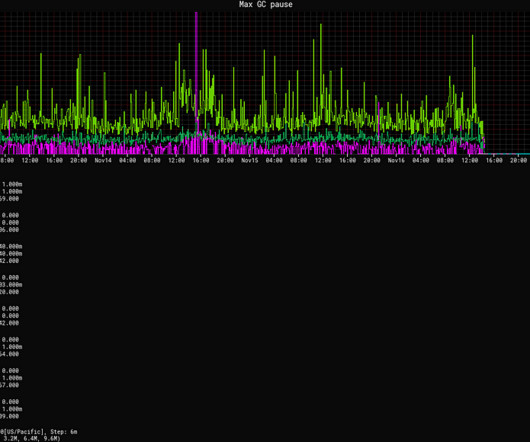

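Reduced tail latencies

In both our gRPC and DGS Framework services, GC pauses are a significant source of tail latencies. Generational ZGC does its collection work concurrently with the application, which keeps those pauses short. We expected the switch to cost some application throughput in exchange for shorter pauses. In fact, we’ve found for our services and architecture that there is no such trade-off. No explicit tuning has been required to achieve these results.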
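Because no tuning was needed, adopting the collector mostly comes down to launch flags. On JDK 21, Generational ZGC is enabled with `-XX:+UseZGC -XX:+ZGenerational`. From JDK 23 onward, `-XX:+UseZGC` alone selects the generational mode. The following is a minimal sketch, not Netflix's tooling, for confirming which collector a running JVM actually uses by reading the standard `GarbageCollectorMXBean`s. The class name `GcReport` is hypothetical, and the check assumes that ZGC's bean names begin with `ZGC`.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: lists the garbage collectors the running JVM reports via JMX.
// Example launch on JDK 21: java -XX:+UseZGC -XX:+ZGenerational GcReport.java
final class GcReport {

    // Names of all GarbageCollectorMXBeans registered in this JVM.
    static List<String> collectorNames() {
        List<String> names = new ArrayList<>();
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            names.add(gc.getName());
        }
        return names;
    }

    // Assumption: ZGC registers beans whose names start with "ZGC".
    static boolean usingZgc() {
        return collectorNames().stream().anyMatch(name -> name.startsWith("ZGC"));
    }

    // One line per collector: name, collection count, and accumulated time in ms.
    // A value of -1 means the bean does not report that figure.
    static String describe() {
        StringBuilder sb = new StringBuilder();
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            sb.append(String.format("%s: count=%d, time=%d ms%n",
                    gc.getName(), gc.getCollectionCount(), gc.getCollectionTime()));
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.println("ZGC active: " + usingZgc());
        System.out.print(describe());
    }
}
```

Running it once with the default collector and once with the ZGC flags is a quick way to check that a deployment picked up the intended configuration. The time reported by each bean depends on what that bean tracks, so it should not be read directly as pause time.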
