Mastering Hybrid Cloud Strategy

Scalegrid

MARCH 14, 2024

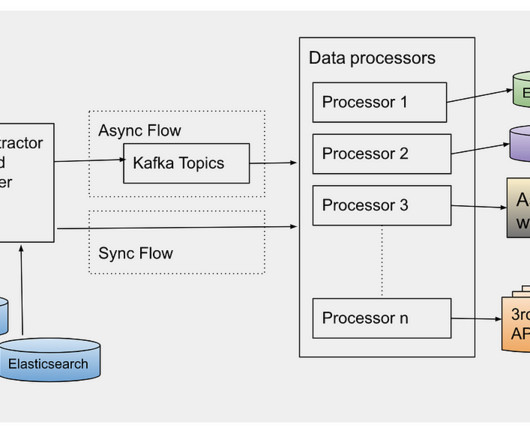

Are you looking to leverage the best of the private and public cloud worlds to propel your business forward? A hybrid cloud strategy could be your answer.

Understanding Hybrid Cloud Strategy

A hybrid cloud merges the capabilities of public and private clouds into a single, coherent system.
