Redis vs Memcached in 2024

Scalegrid

MARCH 28, 2024

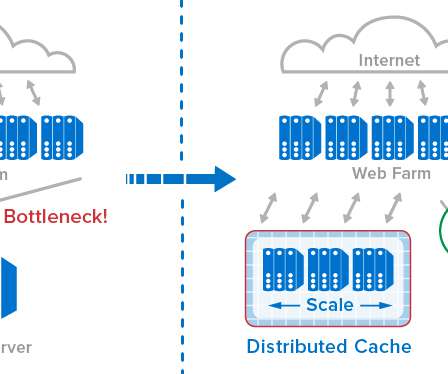

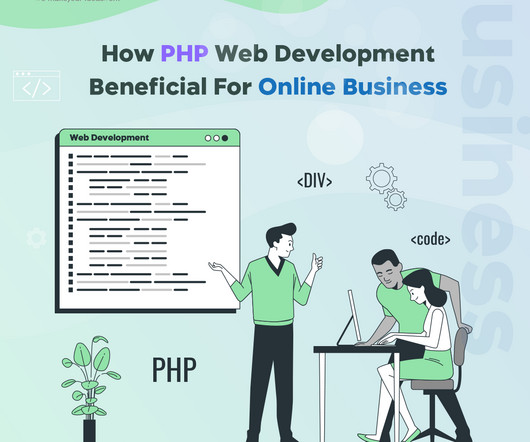

This comparison of Redis and Memcached sets aside the surrounding complexity and focuses on each in-memory data store’s performance, scalability, and distinguishing features. In short, Redis is the better fit for complex data models, while Memcached is the better fit for high-throughput, string-based caching.
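To make the data-model difference concrete, here is a minimal sketch that runs without either server. `FakeStringCache` and `FakeHashStore` are hypothetical in-memory stand-ins, not real clients; their method names are chosen to mirror the `get`/`set` calls of a Memcached client such as pymemcache and the `hset`/`hincrby`/`hget` calls of redis-py. The key `user:1` and the `visits` field are illustrative assumptions.

```python
import json


class FakeStringCache:
    """Hypothetical stand-in for a string-only cache (Memcached-style get/set)."""

    def __init__(self):
        self._data = {}

    def set(self, key, value):
        self._data[key] = value

    def get(self, key):
        return self._data.get(key)


class FakeHashStore:
    """Hypothetical stand-in for a store with hash fields (Redis-style HSET/HINCRBY/HGET)."""

    def __init__(self):
        self._data = {}

    def hset(self, name, mapping):
        self._data.setdefault(name, {}).update(mapping)

    def hincrby(self, name, key, amount=1):
        fields = self._data.setdefault(name, {})
        fields[key] = int(fields.get(key, 0)) + amount
        return fields[key]

    def hget(self, name, key):
        return self._data.get(name, {}).get(key)


def bump_visits_string_cache(cache, key):
    """String model: fetch the whole serialized value, change it, write it all back."""
    profile = json.loads(cache.get(key))
    profile["visits"] += 1
    cache.set(key, json.dumps(profile))
    return profile["visits"]


def bump_visits_hash_store(store, key):
    """Structured model: increment a single field without touching the others."""
    return store.hincrby(key, "visits", 1)


def demo():
    cache = FakeStringCache()
    cache.set("user:1", json.dumps({"name": "Ada", "visits": 1}))
    print("string cache visits:", bump_visits_string_cache(cache, "user:1"))

    store = FakeHashStore()
    store.hset("user:1", mapping={"name": "Ada", "visits": 1})
    print("hash store visits:", bump_visits_hash_store(store, "user:1"))


if __name__ == "__main__":
    demo()
```

With a string-only cache the application serializes the whole object and rewrites it on every change, which is simple and fast when values are read far more often than they are modified. With a hash-style structure the store itself updates one field, which is the kind of operation the "complex data models" case refers to.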
