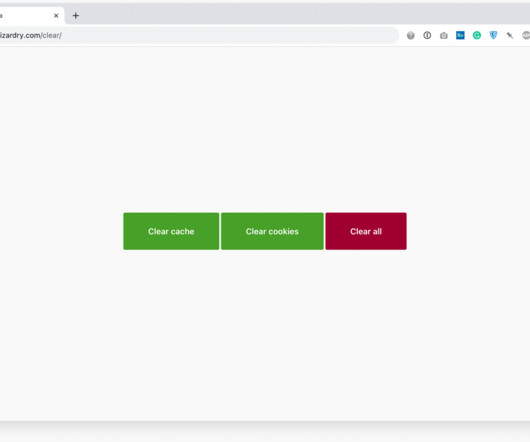

How to Clear Cache and Cookies on a Customer’s Device

CSS Wizardry

OCTOBER 2, 2023

If you work in customer support for any kind of tech firm, you’re probably all too used to talking people through the intricate, tedious steps of clearing their cache and cookies. You have to identify their operating system, platform, and browser, and then try to guide them, invisibly, through every step. Well, there’s an easier way!
