Why applying chaos engineering to data-intensive applications matters

Dynatrace

MAY 23, 2024

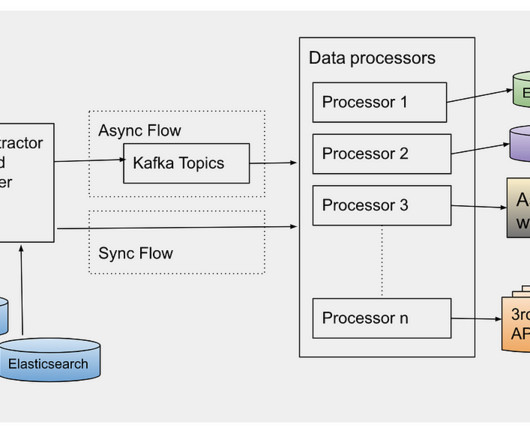

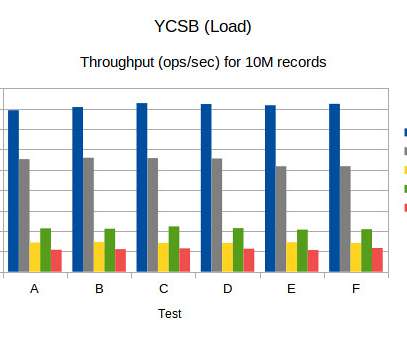

Jobs that execute stream processing workloads are usually required to run indefinitely on unbounded streams of continuous data, and they exhibit heterogeneous failure modes as they run over long periods. Such failures can significantly increase event latency, which matters because performance is usually a primary concern when using stream processing frameworks.
