ETL Workflow Modeling

Abhishek Tiwari

APRIL 14, 2018

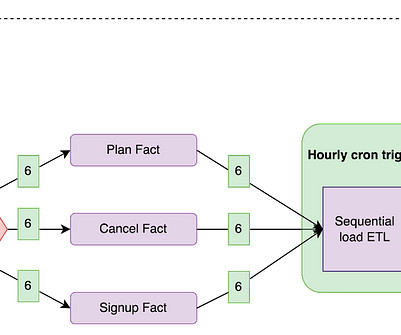

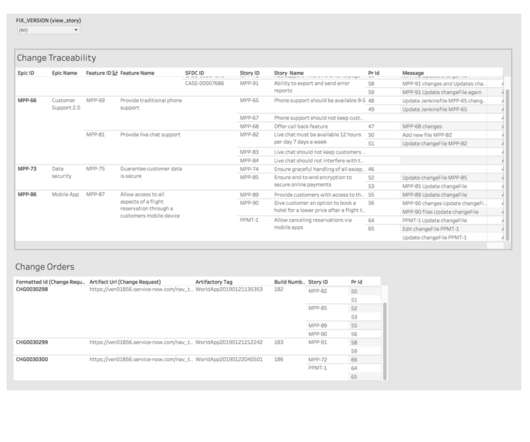

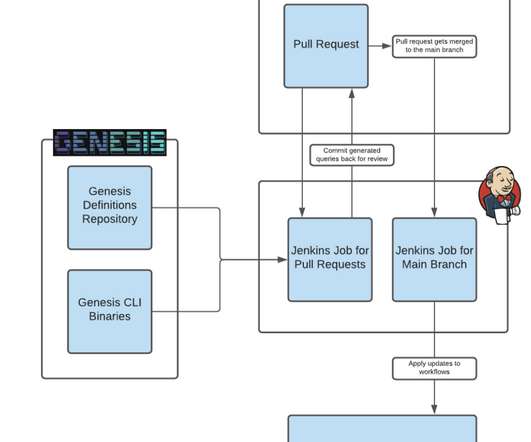

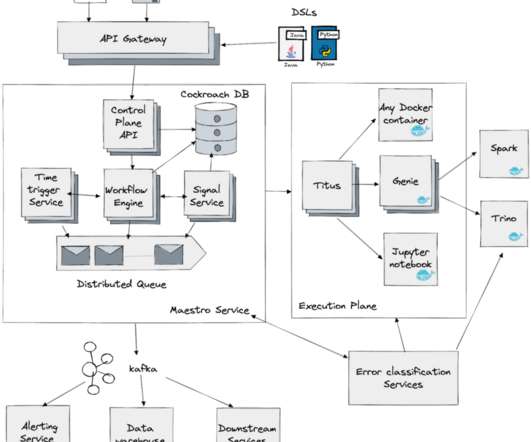

Developing an extract–transform–load (ETL) workflow is time-consuming, yet it is a very important component of the data warehousing process. The development process is often ad hoc, complex, and driven by trial and error. It has been suggested that formal modeling of the ETL process can alleviate most of these pain points.
