Migrating Critical Traffic At Scale with No Downtime?—?Part 2

The Netflix TechBlog

JUNE 13, 2023

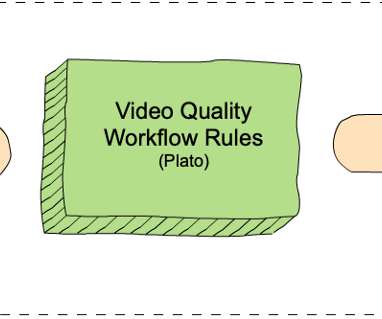

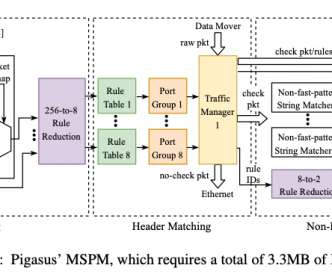

Migrating Critical Traffic At Scale with No Downtime — Part 2 Shyam Gala , Javier Fernandez-Ivern , Anup Rokkam Pratap , Devang Shah Picture yourself enthralled by the latest episode of your beloved Netflix series, delighting in an uninterrupted, high-definition streaming experience. This is where large-scale system migrations come into play.

Let's personalize your content