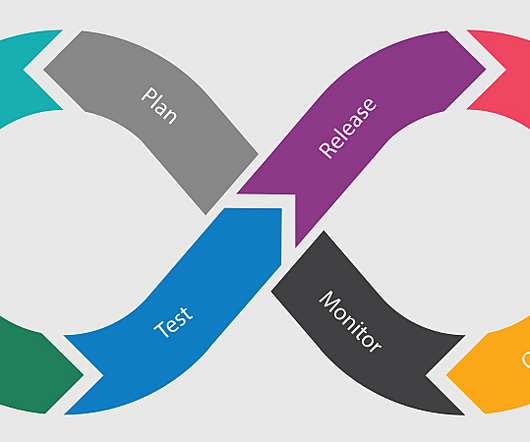

Medallion Architecture: Efficient Batch and Stream Processing Data Pipelines With Azure Databricks and Delta Lake

DZone

JULY 13, 2023

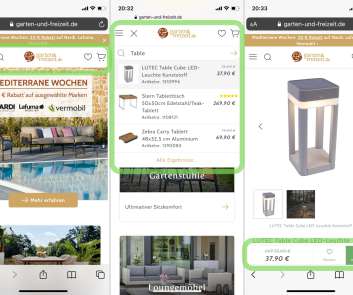

In today's data-driven world, organizations need efficient and scalable data pipelines to process and analyze large volumes of data. Medallion Architecture provides a framework for organizing data processing workflows into different zones, enabling optimized batch and stream processing.

Let's personalize your content