Migrating Critical Traffic At Scale with No Downtime - Part 1

The Netflix TechBlog

MAY 4, 2023

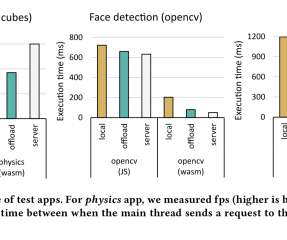

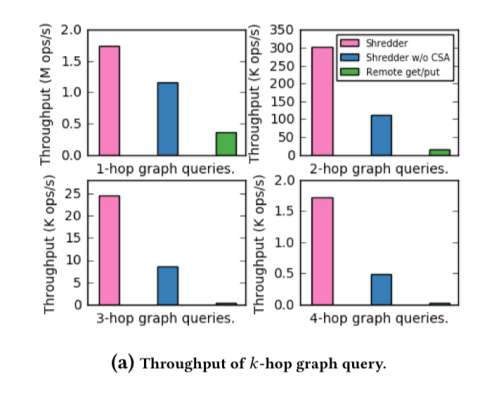

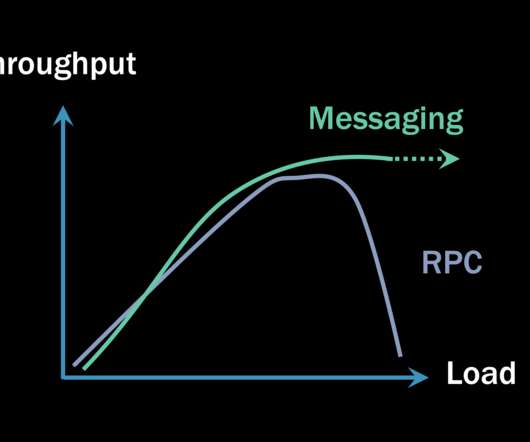

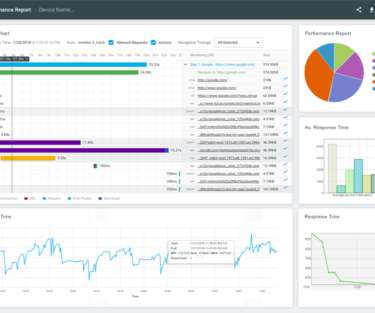

This approach provides a good read on the availability and latency ranges under different production conditions. The options for generating replay traffic include orchestrating it on the device, on the server, or via a dedicated service. Also, because this logic resides on the server side, we can iterate on any required changes faster.
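To make the server-side option concrete, here is a minimal sketch under stated assumptions. It is not Netflix's implementation. It assumes a hypothetical `legacy` handler (the existing production path) and a hypothetical `new` handler (the migration target). The server always answers the client from the legacy path, replays a copy of the same request against the new path, and records whether the two responses match and how long each path took. Summarizing those records gives the availability, match rate, and latency range of the new path. All names (`ReplayingServer`, `ReplayResult`, `timed`) and the exact-equality comparison rule are illustrative choices.

```python
import math
import time
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Optional, Tuple

# Hypothetical request/response shapes: plain dicts for illustration.
Request = Dict[str, Any]
Response = Dict[str, Any]
Handler = Callable[[Request], Response]


@dataclass
class ReplayResult:
    matched: bool                    # did the new path return an identical response?
    legacy_ms: float                 # latency of the production (legacy) path
    new_ms: float                    # latency of the replayed call (NaN if it raised)
    new_error: Optional[str] = None  # repr of the exception if the new path failed


def timed(handler: Handler, request: Request) -> Tuple[Response, float]:
    """Call handler and return (response, elapsed milliseconds)."""
    start = time.perf_counter()
    response = handler(request)
    return response, (time.perf_counter() - start) * 1000.0


class ReplayingServer:
    """Serves from the legacy path and replays each request to the new path."""

    def __init__(self, legacy: Handler, new: Handler) -> None:
        self.legacy = legacy
        self.new = new
        self.results: List[ReplayResult] = []

    def handle(self, request: Request) -> Response:
        # The client only ever receives the legacy response.
        response, legacy_ms = timed(self.legacy, request)
        # Replay a shallow copy to the new path; its failures never reach the client.
        # Done synchronously here for clarity; a real server would replay off the
        # request path so the new handler cannot add latency for the client.
        try:
            replayed, new_ms = timed(self.new, dict(request))
            self.results.append(ReplayResult(replayed == response, legacy_ms, new_ms))
        except Exception as exc:
            self.results.append(ReplayResult(False, legacy_ms, math.nan, repr(exc)))
        return response

    def summary(self) -> Dict[str, Any]:
        """Availability, response-match rate, and latency range of the new path."""
        total = len(self.results)
        ok = [r for r in self.results if r.new_error is None]
        matched = sum(r.matched for r in self.results)
        latencies = [r.new_ms for r in ok]
        return {
            "replayed": total,
            "new_availability": len(ok) / total if total else 0.0,
            "match_rate": matched / total if total else 0.0,
            "new_latency_ms": (min(latencies), max(latencies)) if latencies else None,
        }
```

Keeping the replay on the server means the comparison logic can change without shipping a client update. This matches the faster-iteration point above. A device-driven or dedicated-service variant would move the same duplicate-and-compare step to a different place in the request flow.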
