Architectural Insights: Designing Efficient Multi-Layered Caching With Instagram Example

DZone

FEBRUARY 27, 2024

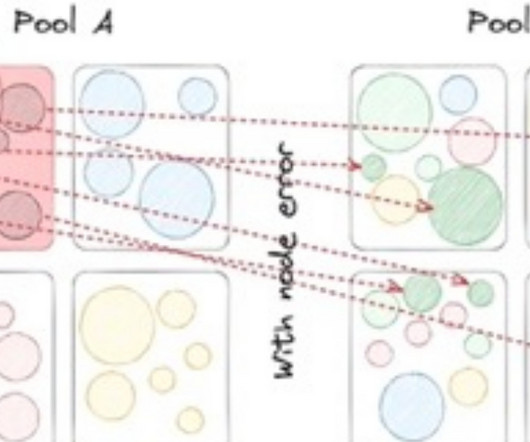

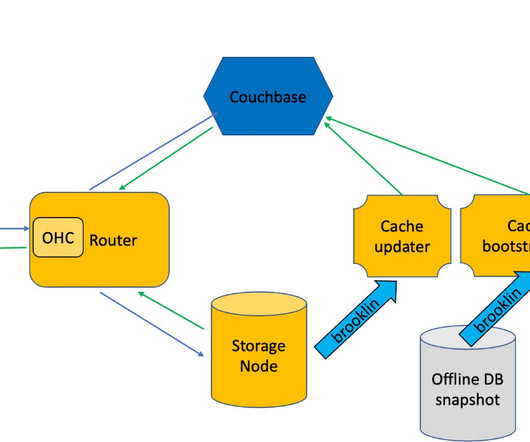

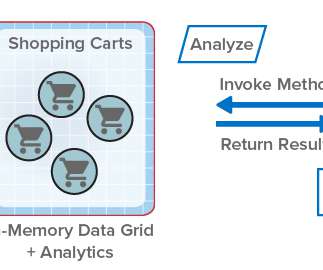

Caching is a critical technique for optimizing application performance: it temporarily stores frequently accessed data so that subsequent requests can retrieve it faster. Multi-layered caching arranges several caches in a hierarchy, so a request checks the fastest layer first and falls through to slower layers, and finally to the original data source, only on a miss.
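To make the idea of multiple cache levels concrete, here is a minimal sketch of a read-through lookup across layers. It is an illustration under stated assumptions, not the design of Instagram or any other production system: plain dictionaries stand in for real caches (for example an in-process cache and a shared cache), and the names `LayeredCache` and `load_from_origin` are hypothetical.

```python
from typing import Callable, Dict, List


class LayeredCache:
    """Read-through lookup over cache layers ordered from fastest to slowest.

    Assumption: each layer is a plain dict standing in for a real cache.
    """

    def __init__(
        self,
        layers: List[Dict[str, str]],
        load_from_origin: Callable[[str], str],
    ) -> None:
        self.layers = layers
        # Hypothetical loader for the slow source of truth (e.g. a database).
        self.load_from_origin = load_from_origin

    def get(self, key: str) -> str:
        # Check each layer in order, fastest first.
        for depth, layer in enumerate(self.layers):
            if key in layer:
                value = layer[key]
                # Copy the value into the faster layers that missed,
                # so the next lookup stops earlier.
                for faster in self.layers[:depth]:
                    faster[key] = value
                return value

        # Every layer missed: load from the origin and populate all layers.
        value = self.load_from_origin(key)
        for layer in self.layers:
            layer[key] = value
        return value
```

A first call to `get` for a key reaches the origin and fills every layer; later calls are served from the first layer that still holds the key. A real deployment would also need eviction, expiry, and invalidation, which this sketch leaves out.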
