Crucial Redis Monitoring Metrics You Must Watch

ScaleGrid

JANUARY 25, 2024

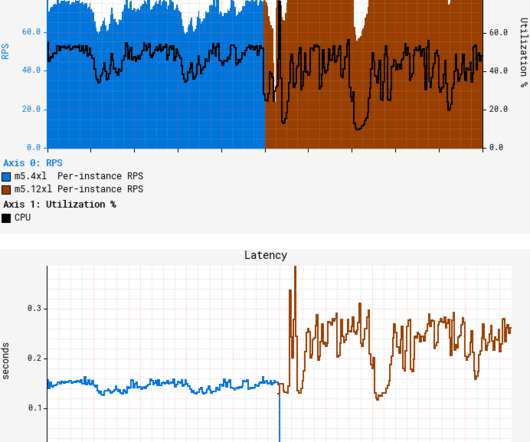

Key Takeaways

Critical performance indicators, including latency, CPU usage, memory utilization, cache hit rate, and the number of connected clients, connected replicas (slaves), and evicted keys, must be monitored to maintain Redis’s high throughput and low latency. When these are kept healthy, Redis can achieve impressive performance, handling up to 50 million operations per second.
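As a rough illustration, most of these indicators can be read from the output of the Redis `INFO` command, which reports `field:value` lines grouped under `# Section` headers (for example `connected_clients`, `connected_slaves`, `used_memory`, `keyspace_hits`, `keyspace_misses`, `evicted_keys`, `used_cpu_sys`, `used_cpu_user`). The Python snippet is a minimal sketch, not a production monitor: it parses raw `INFO` text and computes the hit rate as `keyspace_hits / (keyspace_hits + keyspace_misses)`. The helper names (`parse_info`, `hit_rate`, `summarize`) and the values in `SAMPLE_INFO` are hypothetical; in practice you would feed it real output, e.g. from `redis-cli INFO`. Latency is not reported by `INFO` and is usually measured separately, for instance with `redis-cli --latency`.

```python
"""Minimal sketch: extract key Redis health metrics from raw INFO output."""

from typing import Dict, Union

Value = Union[int, float, str]

# Hypothetical sample of INFO output, for illustration only.
SAMPLE_INFO = """# Clients
connected_clients:42
# Memory
used_memory:1048576
# Stats
keyspace_hits:900
keyspace_misses:100
evicted_keys:7
# Replication
role:master
connected_slaves:2
# CPU
used_cpu_sys:1.50
used_cpu_user:2.25
"""


def parse_info(raw: str) -> Dict[str, Value]:
    """Parse `field:value` lines of INFO output into a dict.

    Section headers (lines starting with '#') and blank lines are skipped.
    Values are converted to int or float where possible, else kept as str.
    """
    fields: Dict[str, Value] = {}
    for line in raw.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or ":" not in line:
            continue
        # Split on the first ':' only; some values contain ':' themselves.
        key, _, value = line.partition(":")
        try:
            fields[key] = int(value)
        except ValueError:
            try:
                fields[key] = float(value)
            except ValueError:
                fields[key] = value
    return fields


def hit_rate(info: Dict[str, Value]) -> float:
    """Cache hit rate = hits / (hits + misses); 0.0 when there is no traffic yet."""
    hits = int(info.get("keyspace_hits", 0))
    misses = int(info.get("keyspace_misses", 0))
    total = hits + misses
    return hits / total if total else 0.0


def summarize(info: Dict[str, Value]) -> Dict[str, Value]:
    """Pick out the metrics named in the key takeaways (latency excluded)."""
    return {
        "used_memory_bytes": info.get("used_memory", 0),
        "connected_clients": info.get("connected_clients", 0),
        "connected_slaves": info.get("connected_slaves", 0),
        "evicted_keys": info.get("evicted_keys", 0),
        "hit_rate": hit_rate(info),
        # Cumulative CPU seconds used by the server since startup (sys + user).
        "cpu_seconds": float(info.get("used_cpu_sys", 0.0))
        + float(info.get("used_cpu_user", 0.0)),
    }


if __name__ == "__main__":
    print(summarize(parse_info(SAMPLE_INFO)))
```

Because `used_cpu_sys`, `used_cpu_user`, and `evicted_keys` are cumulative counters, a CPU usage percentage or an eviction rate needs two samples taken some interval apart; the change between samples is usually more informative than the absolute value.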
