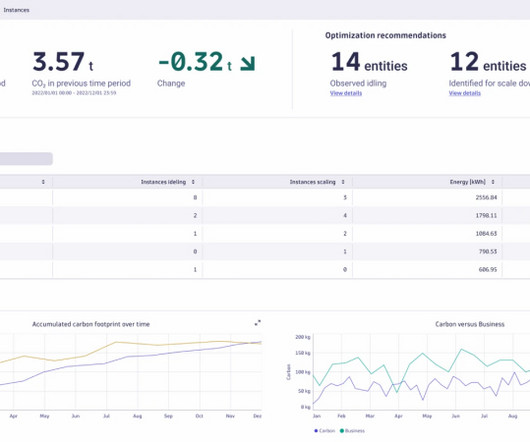

IT carbon footprint: Dynatrace Carbon Impact and Optimization app helps organizations measure cloud computing carbon footprint

Dynatrace

SEPTEMBER 21, 2023

As global warming advances, growing IT carbon footprints are pushing energy-efficient computing to the top of many organizations’ priority lists. Energy efficiency is a key reason organizations are migrating workloads from energy-intensive on-premises environments to more efficient cloud platforms.
