Achieving 100Gbps intrusion prevention on a single server

The Morning Paper

NOVEMBER 15, 2020

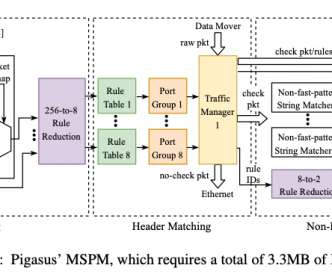

This makes the whole system latency sensitive. So we need low latency, but we also need very high throughput. A recurring theme in the IDS/IPS literature is the gap between the workloads these systems need to handle and the capabilities of existing hardware and software implementations. The target FPGA for Pigasus has 16MB of BRAM.
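To put "latency sensitive" and "16MB of BRAM" into perspective, here is some back-of-the-envelope arithmetic for a 100Gbps link. This is a sketch of the scale of the problem, not a description of how Pigasus itself budgets memory. The assumptions are ours: standard Ethernet framing (64-byte minimum frames plus 20 bytes of preamble and inter-frame gap on the wire), and "16MB" read as 16 MiB. The helper names `max_packet_rate` and `buffer_time_s` are hypothetical.

```python
# Back-of-the-envelope numbers for a 100Gbps line rate.
# Assumptions (not from the post): standard Ethernet framing overheads,
# and "16MB" of BRAM treated as 16 MiB (16 * 2**20 bytes).

LINE_RATE_BPS = 100e9  # 100 Gbps

def max_packet_rate(frame_bytes: int, overhead_bytes: int = 20) -> float:
    """Packets per second at line rate for a given frame size.

    overhead_bytes covers the 8-byte preamble/SFD plus the 12-byte
    inter-frame gap that every Ethernet frame occupies on the wire.
    """
    return LINE_RATE_BPS / ((frame_bytes + overhead_bytes) * 8)

def buffer_time_s(buffer_bytes: int) -> float:
    """Seconds until a buffer of buffer_bytes is full when filled at line rate."""
    return buffer_bytes * 8 / LINE_RATE_BPS

BRAM_BYTES = 16 * 2**20  # hypothetical reading of "16MB" as 16 MiB

if __name__ == "__main__":
    pps = max_packet_rate(64)
    print(f"64-byte frames: {pps / 1e6:.1f} Mpps, {1e9 / pps:.2f} ns per packet")
    print(f"16 MiB of BRAM fills in {buffer_time_s(BRAM_BYTES) * 1e3:.2f} ms")
```

Under these assumptions, worst-case traffic arrives at roughly 148.8 million packets per second (about 6.7 ns per packet), and the entire on-chip memory would absorb only about 1.34 ms of line-rate traffic. That leaves very little room to hold packets while waiting on slow processing.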
