Predictive CPU isolation of containers at Netflix

The Netflix TechBlog

JUNE 4, 2019

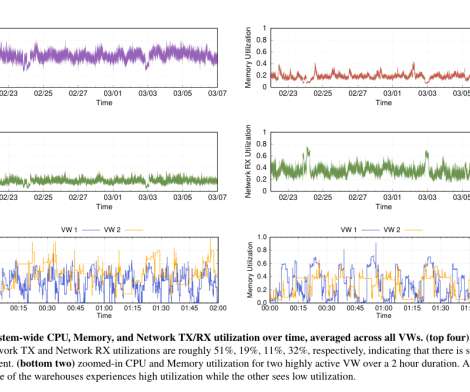

Because microprocessors are so fast, computer architecture design has evolved towards adding various levels of caching between compute units and the main memory, in order to hide the latency of bringing the bits to the brains. This avoids thrashing caches too much for B and evens out the pressure on the L3 caches of the machine.
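Thrashing can be made concrete with a toy simulation. This is not Netflix's code and not a model of any real processor. It assumes a fully associative cache with least-recently-used (LRU) eviction. The helper names `LRUCache` and `run`, the 8-line capacity, and the two 6-line working sets are all hypothetical. Each workload fits in the cache on its own. When both are interleaved through one shared cache, their combined working set exceeds capacity, and LRU evicts every line just before it is reused.

```python
from collections import OrderedDict


class LRUCache:
    """Toy fully associative cache with LRU eviction (hypothetical model, not a real L3)."""

    def __init__(self, capacity_lines: int) -> None:
        self.capacity = capacity_lines
        self.lines = OrderedDict()  # keys are cached addresses, ordered oldest -> newest use
        self.hits = 0
        self.misses = 0

    def access(self, address: int) -> None:
        if address in self.lines:
            self.hits += 1
            self.lines.move_to_end(address)  # mark as most recently used
        else:
            self.misses += 1
            self.lines[address] = None
            if len(self.lines) > self.capacity:
                self.lines.popitem(last=False)  # evict the least recently used line

    def hit_rate(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


def run(workloads, capacity, rounds=100):
    """Interleave each workload's cyclic working set through ONE shared cache."""
    cache = LRUCache(capacity)
    for _ in range(rounds):
        for addresses in zip(*workloads):  # one access from each workload per step
            for addr in addresses:
                cache.access(addr)
    return cache.hit_rate()


A = range(0, 6)      # hypothetical workload A: 6 distinct cache lines
B = range(100, 106)  # hypothetical workload B: 6 different cache lines

print(run([A], capacity=8))     # A alone: only the 6 cold misses
print(run([A, B], capacity=8))  # A and B sharing one cache: every access misses
```

Running A by itself gives a hit rate of 0.99, because only the first pass misses. Running A and B through one shared cache gives 0.0. Giving each workload its own cache (here, calling `run([A], 8)` and `run([B], 8)` separately) restores the high hit rate. This is the intuition behind spreading cache pressure across the separate L3 caches of a machine. Real L3 caches are set associative and use other replacement policies, so the numbers only illustrate the trend.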
