In-Stream Big Data Processing

Highly Scalable

AUGUST 20, 2013

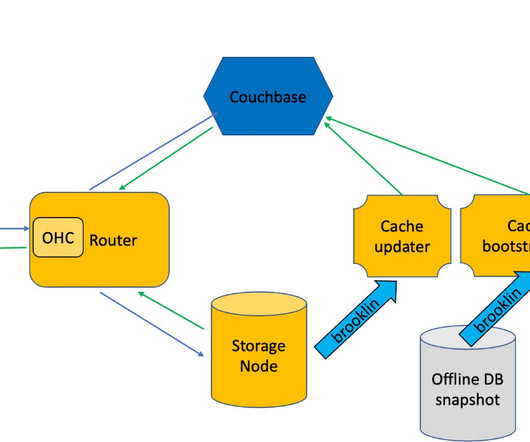

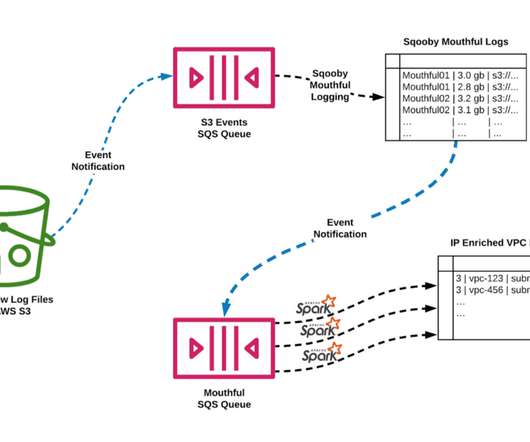

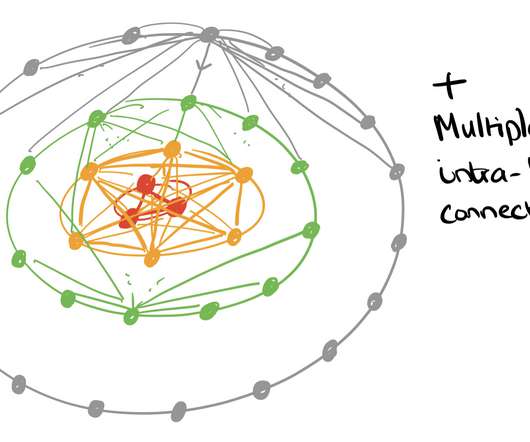

The Big Data community recognized the shortcomings of batch-oriented data processing long ago. The system described in this article was designed to supplement, and eventually succeed, an existing Hadoop-based system whose data processing latency and maintenance costs were both too high.
