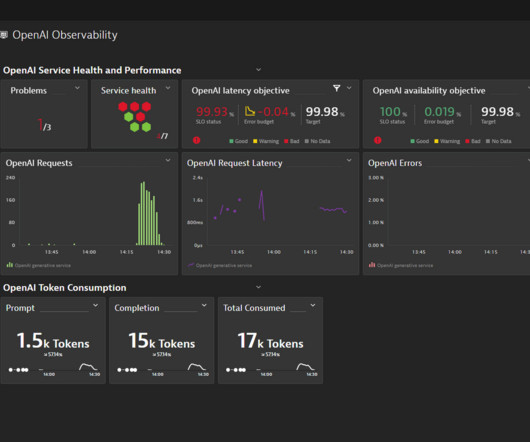

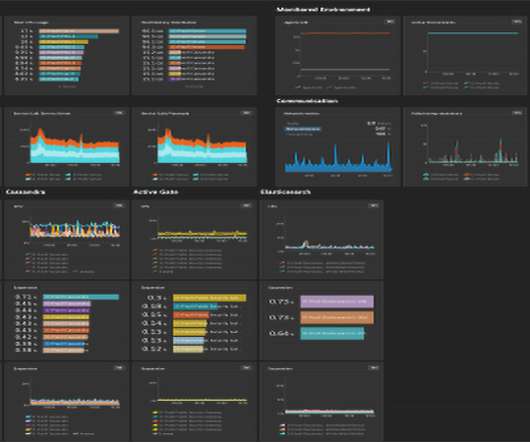

Who will watch the watchers? Extended infrastructure observability for WSO2 API Manager

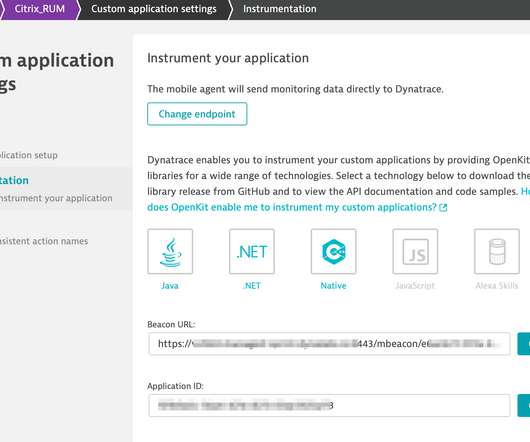

Dynatrace

SEPTEMBER 18, 2020

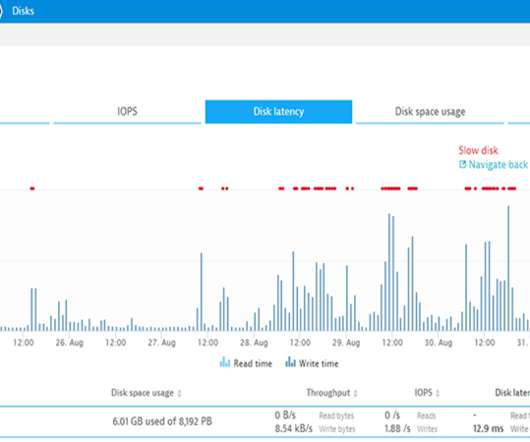

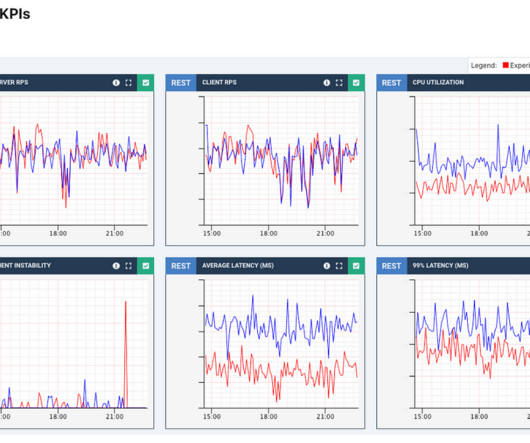

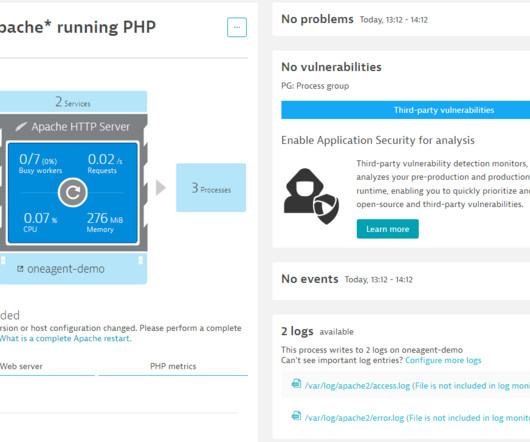

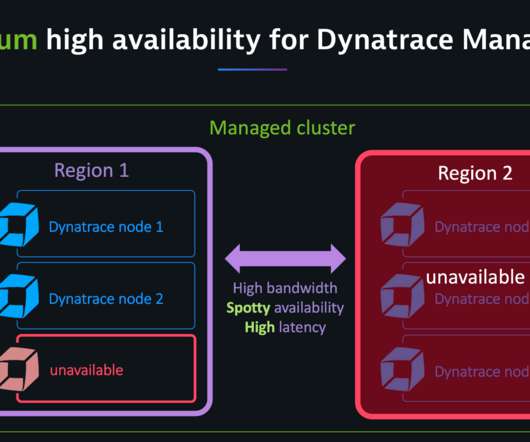

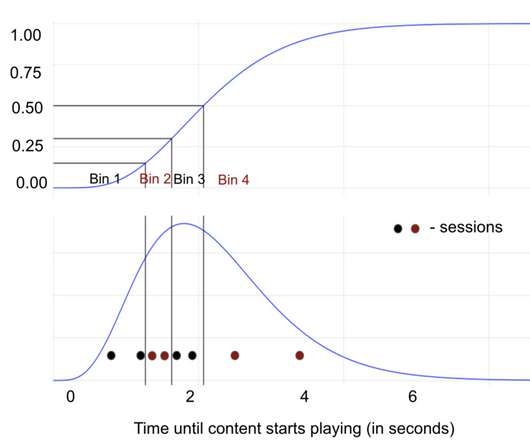

Sure, cloud infrastructure requires comprehensive performance visibility, as Dynatrace provides, but the services that leverage cloud infrastructure also require close attention. Extend infrastructure observability to WSO2 API Manager to catch problems such as high latency, missing responses, or a soaring number of active connections.
