Migrating Critical Traffic At Scale with No Downtime: Part 1

The Netflix TechBlog

MAY 4, 2023

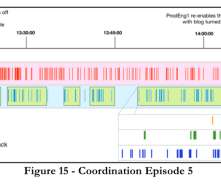

Replay Traffic Testing

Replay traffic refers to production traffic that is cloned and forked over to a different path in the service call graph, allowing us to exercise new/updated systems in a manner that simulates actual production conditions. It helps expose memory leaks, deadlocks, caching issues, and other system issues.
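To make the idea of cloning and forking traffic concrete, here is a minimal Python sketch. It is not how Netflix implements replay traffic; every name in it (`ReplayForker`, `production_path`, `replay_path`) is hypothetical, and the response comparison is an added assumption for illustration. The point it shows is that the caller only ever receives the production response, while a copy of the request exercises the new path off to the side.

```python
import copy
from concurrent.futures import ThreadPoolExecutor


# Hypothetical stand-ins for the existing path and the new/updated path.
def production_path(request):
    return {"status": 200, "body": request["payload"].upper()}


def replay_path(request):
    return {"status": 200, "body": request["payload"].upper()}


class ReplayForker:
    """Serves requests from the primary path and replays a clone on a shadow path."""

    def __init__(self, primary, shadow, max_workers=4):
        self.primary = primary
        self.shadow = shadow
        self.pool = ThreadPoolExecutor(max_workers=max_workers)
        self.mismatches = []      # (request, primary response, shadow response)
        self.shadow_errors = []   # exceptions raised by the shadow path

    def handle(self, request):
        # Clone first so the shadow path cannot mutate the live request.
        clone = copy.deepcopy(request)
        response = self.primary(request)
        # Fork the clone onto the shadow path without blocking the caller.
        self.pool.submit(self._replay, clone, response)
        return response

    def _replay(self, clone, expected):
        try:
            actual = self.shadow(clone)
        except Exception as exc:
            # Shadow failures are recorded, never surfaced to the caller.
            self.shadow_errors.append(repr(exc))
            return
        if actual != expected:
            # Assumption: responses are directly comparable for equality.
            self.mismatches.append((clone, expected, actual))

    def drain(self):
        # Wait for all in-flight replays to finish (useful in tests).
        self.pool.shutdown(wait=True)


if __name__ == "__main__":
    forker = ReplayForker(production_path, replay_path)
    print(forker.handle({"payload": "hello"}))
    forker.drain()
    print("mismatches:", forker.mismatches, "errors:", forker.shadow_errors)
```

Running the replay on a separate thread pool keeps the shadow path's latency and failures away from the production response, which is the property that makes this kind of testing safe to run against live traffic.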
