Migrating Critical Traffic At Scale with No Downtime?—?Part 1

The Netflix TechBlog

MAY 4, 2023

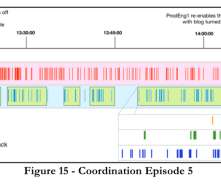

This blog series will examine the tools, techniques, and strategies we have utilized to achieve this goal. In this testing strategy, we execute a copy (replay) of production traffic against a system’s existing and new versions to perform relevant validations. This approach has a handful of benefits.

Let's personalize your content