Designing Instagram

High Scalability

JANUARY 11, 2022

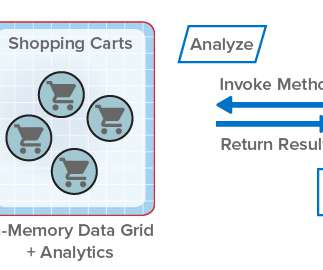

Design a photo-sharing platform similar to Instagram, where users can upload photos and share them with their followers. The article covers the problem statement, the high-level design and architecture, the component design, the API design (including the API for posting an image), and fetching the user feed.
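To make the problem statement concrete, here is a minimal in-memory sketch of the two core operations, posting a photo and fetching a follower's feed. It is not the article's design: the class `PhotoService`, its methods, the example URLs, and the pull-based feed (collecting photos from followed users at read time) are all assumptions chosen for illustration, and a real system would use persistent storage and real timestamps.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from itertools import count


@dataclass
class PhotoService:
    """Toy in-memory model; every name here is hypothetical."""

    # follower -> set of users they follow
    followees: dict = field(default_factory=lambda: defaultdict(set))
    # user -> list of (sequence number, user, image URL)
    photos: dict = field(default_factory=lambda: defaultdict(list))
    # A logical counter stands in for an upload timestamp.
    _clock: count = field(default_factory=count)

    def follow(self, follower, followee):
        self.followees[follower].add(followee)

    def post_photo(self, user, image_url):
        seq = next(self._clock)
        self.photos[user].append((seq, user, image_url))
        return seq

    def fetch_feed(self, user, limit=10):
        # Pull model: gather photos from everyone the user follows,
        # then return the newest ones first.
        candidates = [p for f in self.followees[user] for p in self.photos[f]]
        candidates.sort(key=lambda p: p[0], reverse=True)
        return candidates[:limit]


svc = PhotoService()
svc.follow("bob", "alice")
svc.post_photo("alice", "https://example.com/a1.jpg")
svc.post_photo("carol", "https://example.com/c1.jpg")  # bob does not follow carol
svc.post_photo("alice", "https://example.com/a2.jpg")
feed = svc.fetch_feed("bob")
```

In this sketch `feed` holds only alice's two photos, newest first, because bob follows alice and not carol. Sorting at read time keeps writes cheap but makes reads more expensive as the number of followed users grows, which is the usual trade-off against precomputing feeds at write time.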
