AWS observability: AWS monitoring best practices for resiliency

Dynatrace

NOVEMBER 22, 2021

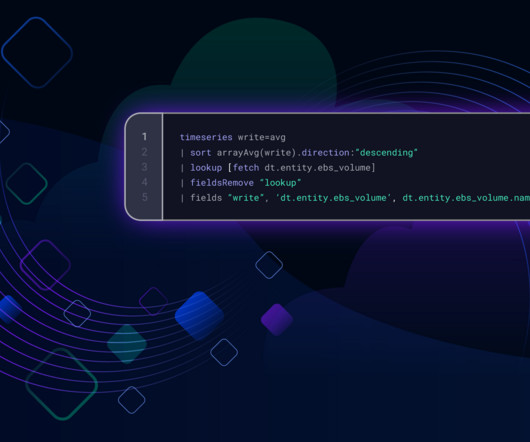

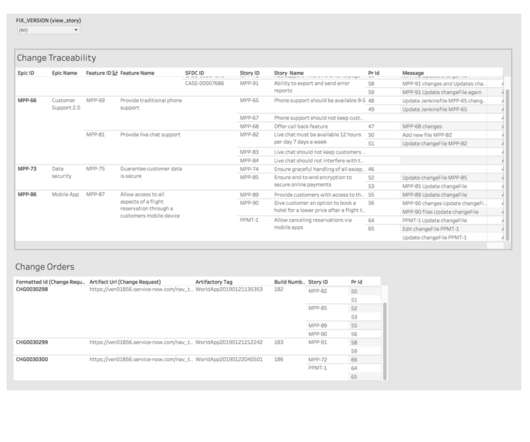

These challenges make AWS observability a key practice for building and monitoring cloud-native applications. Let’s take a closer look at what observability in dynamic AWS environments means, why it’s so important, and some AWS monitoring best practices. AWS monitoring best practices. Amazon EC2.

Let's personalize your content