Implementing service-level objectives to improve software quality

Dynatrace

DECEMBER 27, 2022

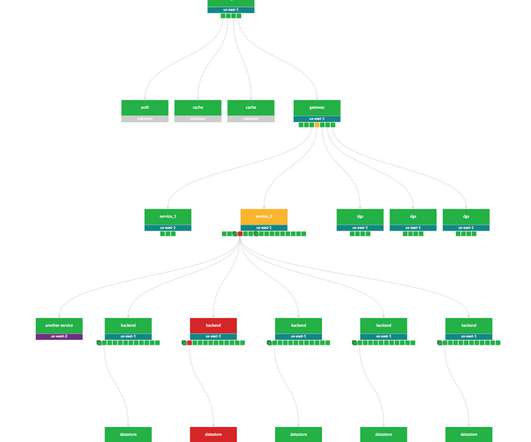

Instead, teams can ensure that services conform to pre-established benchmarks. Using data from Dynatrace and its SLO wizard, teams can benchmark meaningful, user-based reliability measurements and establish error budgets to implement SLOs that meet business objectives and drive greater DevOps automation.
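To make the relationship between an SLO target and its error budget concrete, here is a minimal Python sketch. It assumes a ratio-based SLO (good events divided by total events over one evaluation window) and does not use any Dynatrace API. The function name `evaluate_slo`, the `SloStatus` fields, and the 99.9% target in the example are illustrative assumptions, not part of the Dynatrace SLO wizard.

```python
from dataclasses import dataclass


@dataclass
class SloStatus:
    success_rate: float      # observed fraction of good events in the window
    meets_target: bool       # True if success_rate >= target
    budget_remaining: float  # fraction of the error budget left (negative if overspent)


def evaluate_slo(good_events: int, total_events: int, target: float) -> SloStatus:
    """Hypothetical helper: compute SLO status and remaining error budget
    for a single evaluation window of a ratio-based SLO."""
    if total_events <= 0:
        raise ValueError("total_events must be positive")
    if not 0.0 < target < 1.0:
        raise ValueError("target must be between 0 and 1 (exclusive)")

    success_rate = good_events / total_events
    # The error budget is the share of events allowed to fail: 1 - target.
    allowed_failures = (1.0 - target) * total_events
    actual_failures = total_events - good_events
    # 1.0 means no budget spent; 0.0 means exactly exhausted.
    budget_remaining = 1.0 - actual_failures / allowed_failures
    return SloStatus(success_rate, success_rate >= target, budget_remaining)


if __name__ == "__main__":
    status = evaluate_slo(good_events=999_600, total_events=1_000_000, target=0.999)
    print(status)
```

With a 99.9% target and 1,000,000 requests, the budget allows about 1,000 failed requests; 400 observed failures leave roughly 60% of the budget. Expressing the remaining budget as a fraction makes it easy to drive automation, for example pausing risky deployments once the value approaches zero.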
