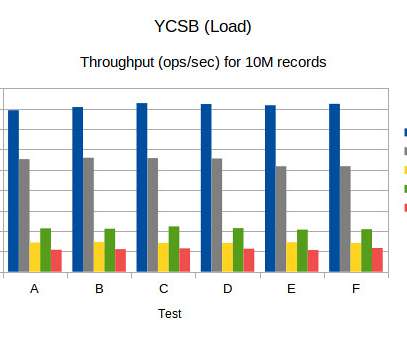

Benchmark (YCSB) numbers for Redis, MongoDB, Couchbase, Yugabyte and BangDB

High Scalability

FEBRUARY 17, 2021

This article reports detailed YCSB benchmark results for five NoSQL databases (Redis, MongoDB, Couchbase, Yugabyte, and BangDB) and compares them side by side. I used the six default test scenarios defined by the YCSB framework and limited each test to 10M records.
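For readers unfamiliar with YCSB, here is a minimal sketch of what that setup looks like, assuming the six default scenarios are the standard core workloads A through F with their stock operation mixes. The helper `property_overrides` is hypothetical and only for illustration; the article does not state the operation count, thread count, or request distribution, so those are left out.

```python
# Stock operation mixes of the YCSB core workloads A-F.
# Assumption: the article's "six default scenarios" are these workloads, unmodified.
CORE_WORKLOADS = {
    "workloada": {"readproportion": 0.5, "updateproportion": 0.5},          # update heavy
    "workloadb": {"readproportion": 0.95, "updateproportion": 0.05},        # read mostly
    "workloadc": {"readproportion": 1.0},                                   # read only
    "workloadd": {"readproportion": 0.95, "insertproportion": 0.05},        # read latest
    "workloade": {"scanproportion": 0.95, "insertproportion": 0.05},        # short ranges
    "workloadf": {"readproportion": 0.5, "readmodifywriteproportion": 0.5}, # read-modify-write
}

RECORD_COUNT = 10_000_000  # 10M records per test, as stated in the article


def property_overrides(workload_name):
    """Hypothetical helper: build 'key=value' strings in YCSB property syntax."""
    props = {"recordcount": RECORD_COUNT, **CORE_WORKLOADS[workload_name]}
    return [f"{key}={value}" for key, value in props.items()]


if __name__ == "__main__":
    for name in CORE_WORKLOADS:
        print(name, property_overrides(name))
```

Each string can be passed to the YCSB command line as a `-p key=value` override, though the values actually used for the runs reported here may differ.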
