Plan Your Multi-Cloud Strategy

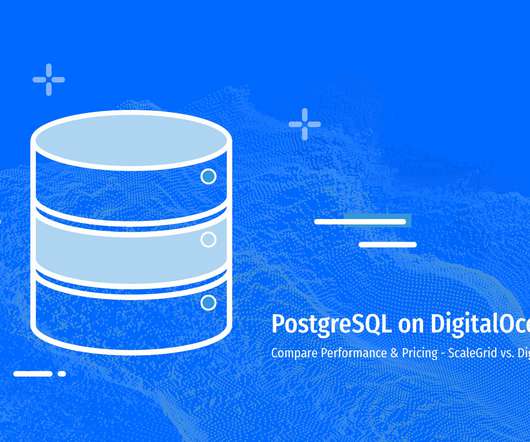

Scalegrid

MARCH 22, 2024

A well-planned multi-cloud strategy can significantly upgrade your business's technology stack and make you more agile.

Key Takeaways

Multi-cloud strategies have become increasingly popular because organizations need flexibility, want room to innovate, and want to avoid vendor lock-in. They can also improve uptime and reduce both latency and the risk of downtime.
