Site reliability done right: 5 SRE best practices that deliver on business objectives

Dynatrace

MAY 31, 2023

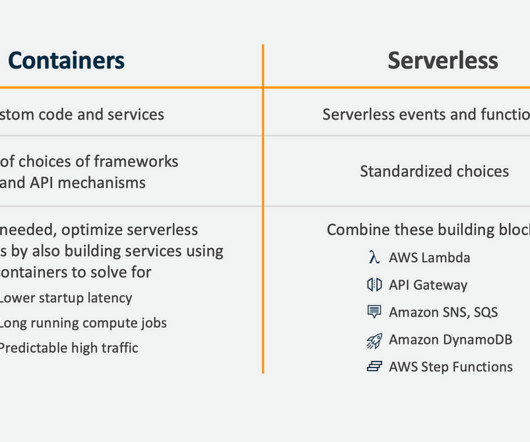

Microservices-based architectures and software containers enable organizations to deploy and modify applications with unprecedented speed.

Aligning site reliability goals with business objectives

Because organizations can now ship changes this quickly, SRE best practices align reliability objectives with business outcomes.
