Bandwidth or Latency: When to Optimise for Which

CSS Wizardry

JANUARY 31, 2019

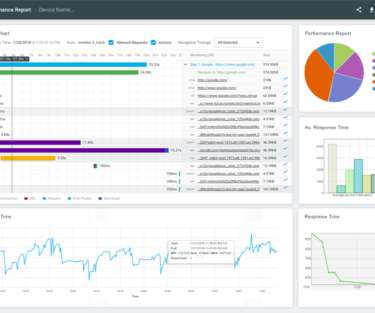

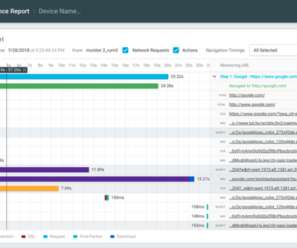

When it comes to network performance, there are two main limiting factors that will slow you down: bandwidth and latency. Latency is defined as… Where bandwidth deals with capacity, latency is more about speed of transfer. Bandwidth is defined as…
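To make the capacity-versus-speed distinction concrete, here is a rough back-of-the-envelope sketch. It assumes a deliberately simplified model in which fetch time is the round-trip cost plus the time to push the bytes through the pipe; it ignores real-world effects such as TCP slow start, TLS negotiation, and packet loss. The function name `transfer_time_ms` and all of the figures are hypothetical, chosen purely for illustration.

```python
def transfer_time_ms(size_kb: float, bandwidth_mbps: float,
                     rtt_ms: float, round_trips: int = 1) -> float:
    """Estimate the time to fetch a resource, in milliseconds.

    Simplified model (an assumption, not a measurement):
        time = round_trips * RTT + size / bandwidth
    """
    # Latency cost: paid once per round trip, regardless of file size.
    latency_cost_ms = rtt_ms * round_trips
    # Bandwidth cost: grows with file size (1 KB = 1000 bytes = 8000 bits).
    size_bits = size_kb * 1000 * 8
    bandwidth_cost_ms = size_bits / (bandwidth_mbps * 1_000_000) * 1000
    return latency_cost_ms + bandwidth_cost_ms


# A small file (10 KB) versus a large file (10,000 KB),
# each on a 10 Mbps link with a 100 ms round trip.
for label, size_kb in [("small", 10), ("large", 10_000)]:
    baseline = transfer_time_ms(size_kb, bandwidth_mbps=10, rtt_ms=100)
    more_bandwidth = transfer_time_ms(size_kb, bandwidth_mbps=20, rtt_ms=100)
    less_latency = transfer_time_ms(size_kb, bandwidth_mbps=10, rtt_ms=50)
    print(f"{label}: baseline={baseline:.0f} ms, "
          f"2x bandwidth={more_bandwidth:.0f} ms, "
          f"half latency={less_latency:.0f} ms")
```

Under these assumed numbers, halving latency helps the small file far more than doubling bandwidth does, while the reverse is true for the large file.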
