Optimize your environment: Unveiling Dynatrace Hyper-V extension for enhanced performance and efficient troubleshooting

Dynatrace

OCTOBER 23, 2023

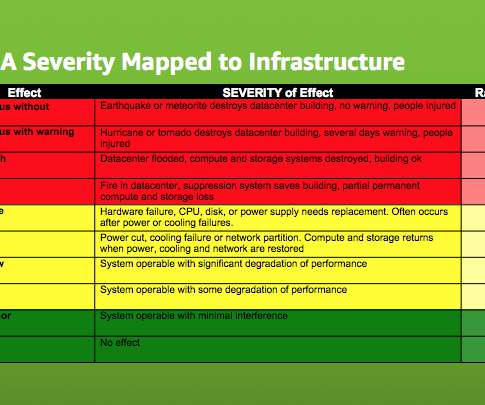

It enables multiple operating systems to run simultaneously on the same physical hardware and integrates closely with Windows-hosted services. Firstly, managing virtual networks can be complex as networking in a virtual environment differs significantly from traditional networking.

Let's personalize your content